What is pagination | Best practices for implementing pagination


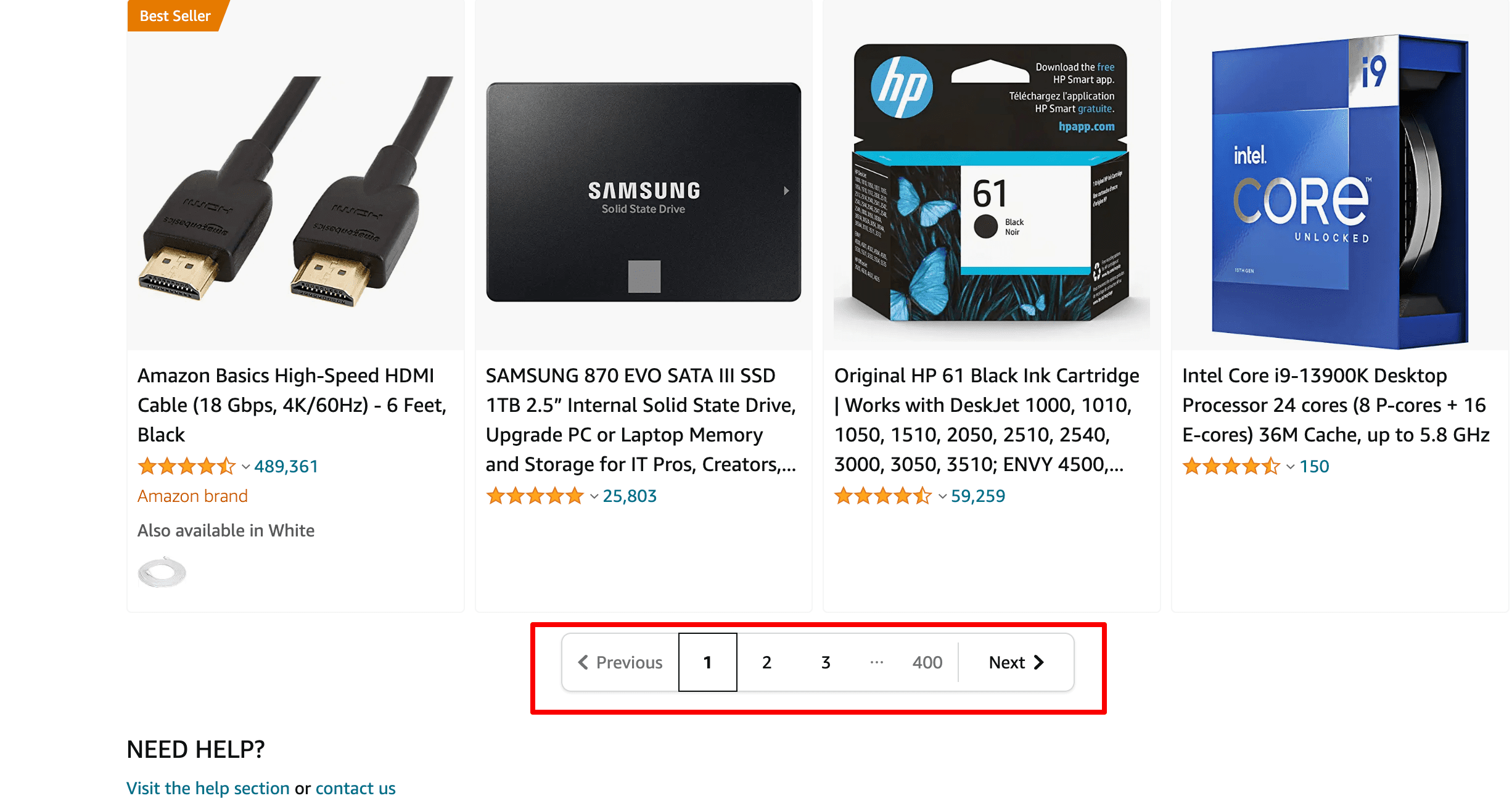

Pagination is the numbering of pages in ascending order. Website pagination comes in handy when you need to split content across pages and present an extensive data set in manageable chunks. Websites with a large assortment and lots of content (e.g., e-commerce websites, news portals and blogs) may need multiple pages for easier navigation, a better user experience, a smoother buyer's journey, etc.

Numbering is displayed at the top or bottom of the page and lets users move from one group of links to another.

How does pagination affect SEO?

Splitting information across multiple pages increases the website's usability. It's also essential to implement pagination correctly, as this determines whether your important content will be indexed. Both the website's usability and its indexing directly affect its search engine visibility.

Let's take a closer look at these factors.

Website usability

Search engines try to show the most relevant and informative results at the top of SERPs and have many criteria for evaluating a website's usability, as well as its content quality. Pagination affects several of these criteria:

One of the indirect signals of content quality is the time users spend on the website. The more convenient it is for users to move between paginated content, the more time they spend on your site.

Website pagination makes it easier for users to find the information they're looking for. Users immediately understand the website structure and can get to the desired page in a single click.

According to Google, paginated content can help you improve page performance (which is a Google Search ranking signal). Here's why: paginated pages load faster than a page showing all results at once. Plus, you improve backend performance by reducing the volume of content retrieved from databases.
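To make the backend point concrete, here is a minimal Python sketch, assuming a hypothetical SQLite `products` table: each request asks the database for one page of rows with `LIMIT`/`OFFSET` instead of loading the whole catalog. The table and column names are illustrative, not taken from any particular platform.

```python
import sqlite3


def fetch_page(conn: sqlite3.Connection, page: int, per_page: int = 20) -> list:
    """Return only the rows for one 1-based page instead of the whole catalog."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    offset = (page - 1) * per_page
    # LIMIT/OFFSET asks the database for a single slice of the ordered results.
    cur = conn.execute(
        "SELECT id, name FROM products ORDER BY id LIMIT ? OFFSET ?",
        (per_page, offset),
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO products (id, name) VALUES (?, ?)",
        [(i, f"Product {i}") for i in range(1, 101)],
    )
    print(fetch_page(conn, 3, per_page=10))  # the rows with ids 21..30
```

For very deep pages, large offsets get slower because the database still has to skip the preceding rows; keyset pagination (filtering on the last seen `id`) is a common alternative.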

Crawling and indexing

If you want paginated content to appear on SERPs, think about how bots crawl and index pages:

Google needs to make sure all website pages are completely unique: duplicate content poses indexing problems. Crawlers perceive paginated pages as separate URLs. At the same time, these pages are very likely to contain similar or identical content.

A search engine bot has a limit on how many pages it can crawl during a single visit to the site (the crawl budget). While Google's bots are busy crawling numerous paginated pages, they won't be visiting other, probably more important, URLs. As a result, important content may be indexed later or not indexed at all.

SEO solutions for paginated content

There are several ways to help search engines understand that your paginated content is not duplicated and to get it to rank well on SERPs.

Index all paginated pages and their content

For this method, you'll need to optimize all paginated URLs according to search engine guidelines. This means you should make paginated content unique and establish connections between URLs to give crawlers clear guidance on how you want them to index and display your content.

- Expected result: All paginated URLs are indexed and ranked in search engines. This flexible option works for both short and long pagination chains.

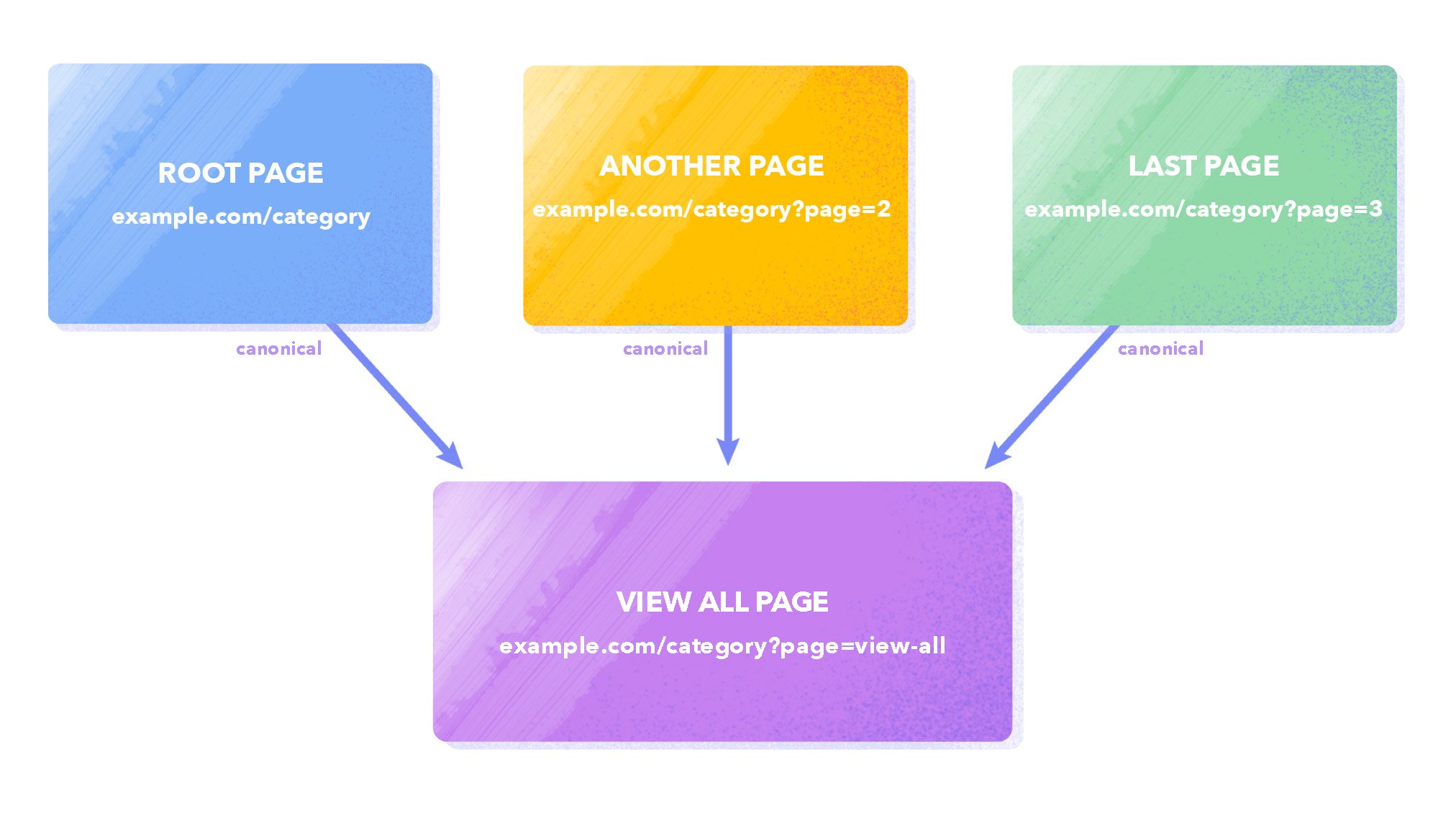

Index the View all page only

Another approach is to canonicalize the View All page (where all products/blog posts, comments, etc. are displayed). You need to add a canonical link pointing to the View All page to each paginated URL. The canonical link signals to search engines that this page is the priority for indexing. At the same time, the crawler can still scan all the links on non-canonical pages (as long as these pages don't block search engine crawlers). This way, you indicate that non-primary pages like page=2/3/4 don't need to be indexed but can be followed.

Here is an example of the code you need to add to each paginated page:

```html
<link href="http://website.com/canonical-page" rel="canonical" />
```
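If the pages are generated by a template, the same tag can be produced for the whole series. Below is a minimal Python sketch, assuming hypothetical `example.com` URLs: every paginated URL gets an identical canonical tag pointing at the View All page, with the URL HTML-escaped so characters like `&` stay valid inside the attribute.

```python
from html import escape


def view_all_canonical(view_all_url: str) -> str:
    """Build the <link rel="canonical"> tag that every paginated URL should carry."""
    # Escape the URL so '&' or quotes cannot break the href attribute.
    return f'<link rel="canonical" href="{escape(view_all_url, quote=True)}" />'


def head_tags_for_series(page_urls: list, view_all_url: str) -> dict:
    """Map each paginated URL to the canonical tag it should include in <head>."""
    tag = view_all_canonical(view_all_url)
    # The same tag goes on page 1, page 2, page 3, ... so they all point to View All.
    return {url: tag for url in page_urls}


if __name__ == "__main__":
    series = [f"https://example.com/shoes?page={n}" for n in range(1, 4)]
    for url, tag in head_tags_for_series(series, "https://example.com/shoes/view-all").items():
        print(url, "->", tag)
```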

- Expected result: This method is suitable for small website categories that have, for instance, three or four paginated pages. If there are more pages, this option won't work well, since loading a large amount of data on one page can hurt its speed.

Prevent paginated content from being indexed by search engines

A classic way to solve pagination issues is to use a robots noindex tag. The idea is to exclude all paginated URLs from the index except the first one. This saves crawl budget so Google can index your important URLs. It is also a simple way to hide duplicate pages.

One option is to restrict access to paginated content by adding a directive to your robots.txt file:

```text
User-agent: *
Disallow: /*page=
```
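Before deploying a wildcard rule like this, it helps to check which URLs it actually catches. Google documents `*` (any sequence of characters) and a trailing `$` (end of URL) in robots.txt paths, while Python's standard `urllib.robotparser` treats paths as plain prefixes, so here is a simplified matcher written for illustration. It is a sketch under stated assumptions: it only evaluates Disallow patterns and ignores Allow rules and longest-match precedence.

```python
import re
from urllib.parse import urlsplit


def rule_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, trailing '$' anchor) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each escaped '*' back into '.*'.
    body = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(url: str, disallow_rules: list) -> bool:
    """Return True if the URL's path plus query matches any Disallow pattern."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # re.match anchors at the start of the string, mirroring robots.txt prefix matching.
    return any(rule_to_regex(rule).match(target) for rule in disallow_rules)


if __name__ == "__main__":
    rules = ["/*page="]
    for url in ["https://example.com/shoes", "https://example.com/shoes?page=2"]:
        print(url, is_disallowed(url, rules))
```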

However, since the robots.txt file is just a set of recommendations for crawlers, you can't force them to obey any commands. Therefore, it's better to block pages from indexing with the robots meta tag.

To do this, add <meta name="robots" content="noindex"> to the <head> section of all paginated pages except the root one.

The HTML code will look like this:

```html
<!DOCTYPE html>
<html>
<head>
  <meta name="robots" content="noindex">
  (…)
</head>
<body>(…)</body>
</html>
```
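In a templated site, the rule "noindex on every page except the root one" is usually a single condition on the page number. A minimal Python sketch, assuming 1-based page numbers; the function name is hypothetical, and the `directives` parameter lets you emit `noindex, follow` instead if you prefer to state the follow behavior explicitly.

```python
def robots_meta_for_page(page: int, directives: str = "noindex") -> str:
    """Return the robots meta tag for a 1-based page number in a paginated series."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    # The root (first) page stays indexable, so it gets no robots meta tag at all.
    if page == 1:
        return ""
    # Every later page is kept out of the index.
    return f'<meta name="robots" content="{directives}">'


if __name__ == "__main__":
    for n in range(1, 4):
        print(n, repr(robots_meta_for_page(n)))
```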

- Expected result: This method is suitable for large websites with multiple sections and categories. If you're going to follow this method, you must have a well-optimized XML sitemap. One of the cons is that you're likely to have issues with indexing product pages featured on paginated URLs that are closed to Googlebot.

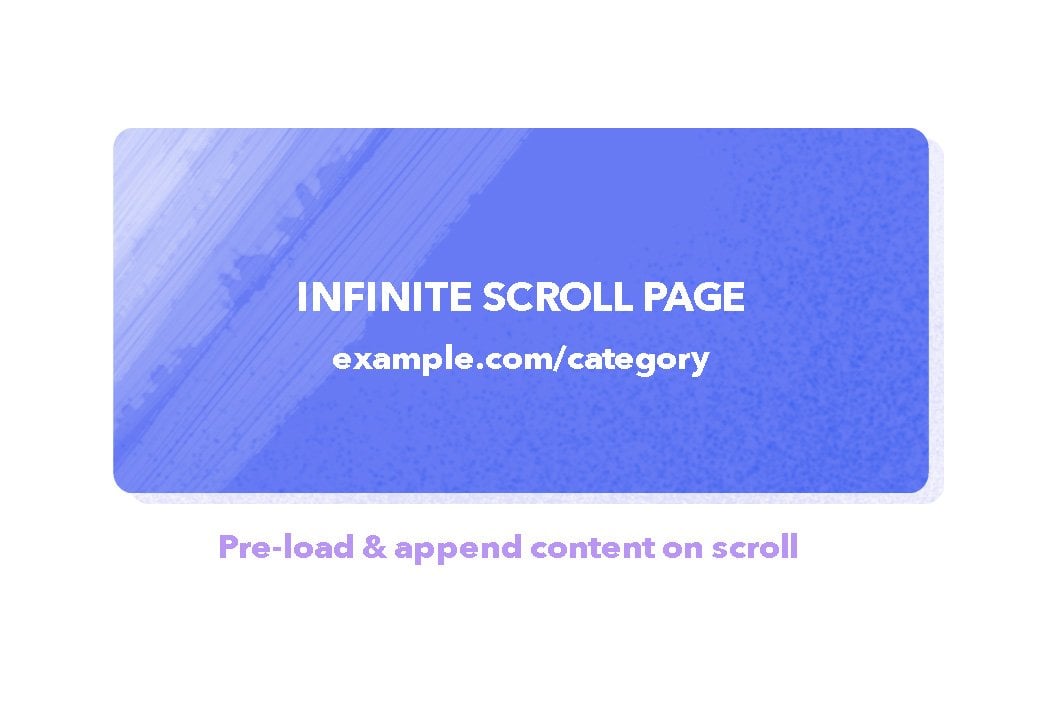

Infinite scrolling

You've probably come across endless scrolling of goods on e-commerce websites, where new products are constantly added to the page when you scroll to the bottom of the screen. This type of user experience is called single-page content. Some experts prefer the Load more approach. In this method, unlike infinite scrolling, content is loaded using a button that users click to extend the initial set of displayed results.

Load more and infinite scroll are usually implemented with Ajax loading (JavaScript).

According to Google's recommendations, if you are implementing an infinite scroll or Load more experience, you should support paginated loading, which helps with user engagement and content sharing. To do this, provide a unique link to each section that users can click, copy, share and load directly. The search engine recommends using the History API to update the URL when content is loaded dynamically.
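On the browser side, updating the address bar is done with the History API (`history.pushState` or `history.replaceState`) in JavaScript. The server side then has to agree on which URL represents each loaded chunk, and render that URL on its own when it is opened directly. The Python sketch below covers only that mapping, assuming a fixed page size and a `?page=n` query parameter; both are illustrative choices, not requirements from Google.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_for_item(item_index: int, per_page: int) -> int:
    """1-based page number that contains the 0-based item index."""
    return item_index // per_page + 1


def page_url(base_url: str, page: int) -> str:
    """Shareable URL for one chunk of an infinite-scroll list, e.g. ?page=3."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    if page == 1:
        query.pop("page", None)  # page 1 keeps the clean category URL
    else:
        query["page"] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    # A visitor has scrolled down to item 45 with 20 items per chunk.
    n = page_for_item(45, per_page=20)
    print(page_url("https://example.com/shoes", n))  # URL the front end would push
```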

- Expected result: As content automatically loads on scroll, it keeps visitors on the website longer. But there are several disadvantages. First, the user can't bookmark a particular page to return to it later. Second, infinite scrolling can make the footer inaccessible, as new results keep loading and constantly push the footer down. Third, the scroll bar doesn't show the actual browsing progress, which may cause scrolling fatigue.

Common pagination mistakes and how to detect them

Now, let's talk about pagination issues, which can be detected with special tools.

1. Issues with implementing canonical tags.

As we said above, canonical links are used to point bots to priority URLs for indexing. The rel="canonical" attribute is placed within the <head> section of pages and defines the main version among duplicate and similar pages. In some cases, the canonical link is placed on the same page it points to, increasing the likelihood of this URL being indexed.

If canonical links aren't set up correctly, the crawler may ignore your directives about the priority URL.

2. Combining a canonical URL with a noindex robots meta tag.

Never mix the noindex tag and rel=canonical, as they give Google contradictory information. While rel=canonical tells the search engine which URL is prioritized and passes all signals to the main page, noindex simply tells the crawler not to index the page. At the same time, noindex is a stronger signal for Google.

If you want a URL to stay out of the index and still point to the canonical page, use a 301 redirect.
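An audit for this mistake boils down to reading two things from each page's markup: the robots meta directives and the canonical link. A minimal Python sketch using the standard library's `html.parser`; the class and function names are hypothetical, and it follows the rule above literally by flagging any page that carries both noindex and a canonical link.

```python
from html.parser import HTMLParser
from typing import Optional


class HeadSignals(HTMLParser):
    """Collect the robots meta directives and the canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = set()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names; values may be None.
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() == "robots":
            self.robots.update(d.strip().lower() for d in a.get("content", "").split(","))
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")


def mixes_noindex_and_canonical(html: str) -> bool:
    """True if the page sends both a noindex directive and a canonical link."""
    parser = HeadSignals()
    parser.feed(html)
    return "noindex" in parser.robots and parser.canonical is not None


if __name__ == "__main__":
    page = ('<head><meta name="robots" content="noindex, follow">'
            '<link rel="canonical" href="https://example.com/shoes" /></head>')
    print(mixes_noindex_and_canonical(page))  # True: contradictory signals
```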

3. Blocking access to the page with robots.txt and using a canonical tag at the same time.

We described a similar mistake above: some specialists block access to non-canonical pages in robots.txt.

```text
User-agent: *
Disallow: /
```

But you shouldn't do this. Otherwise, the bot won't be able to crawl the page and won't see the canonical tag you added. This means the crawler won't understand which page is canonical.
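This conflict can be caught automatically by running your robots.txt rules against the list of pages that carry a canonical tag. A minimal sketch using Python's standard `urllib.robotparser`, which handles simple prefix rules such as `Disallow: /`; the function name, the sample URLs and the choice of `Googlebot` as the user agent are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def canonicals_hidden_by_robots(robots_txt: str, pages_with_canonical: list,
                                user_agent: str = "Googlebot") -> list:
    """Return pages whose canonical tag a crawler can never read because robots.txt blocks them."""
    parser = RobotFileParser()
    # parse() accepts the robots.txt content as a sequence of lines.
    parser.parse(robots_txt.splitlines())
    return [url for url in pages_with_canonical if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /"
    print(canonicals_hidden_by_robots(rules, ["https://example.com/shoes?page=2"]))
```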

Tools for finding SEO pagination issues on a website

Webmaster tools can quickly detect issues related to website optimization, including pagination.

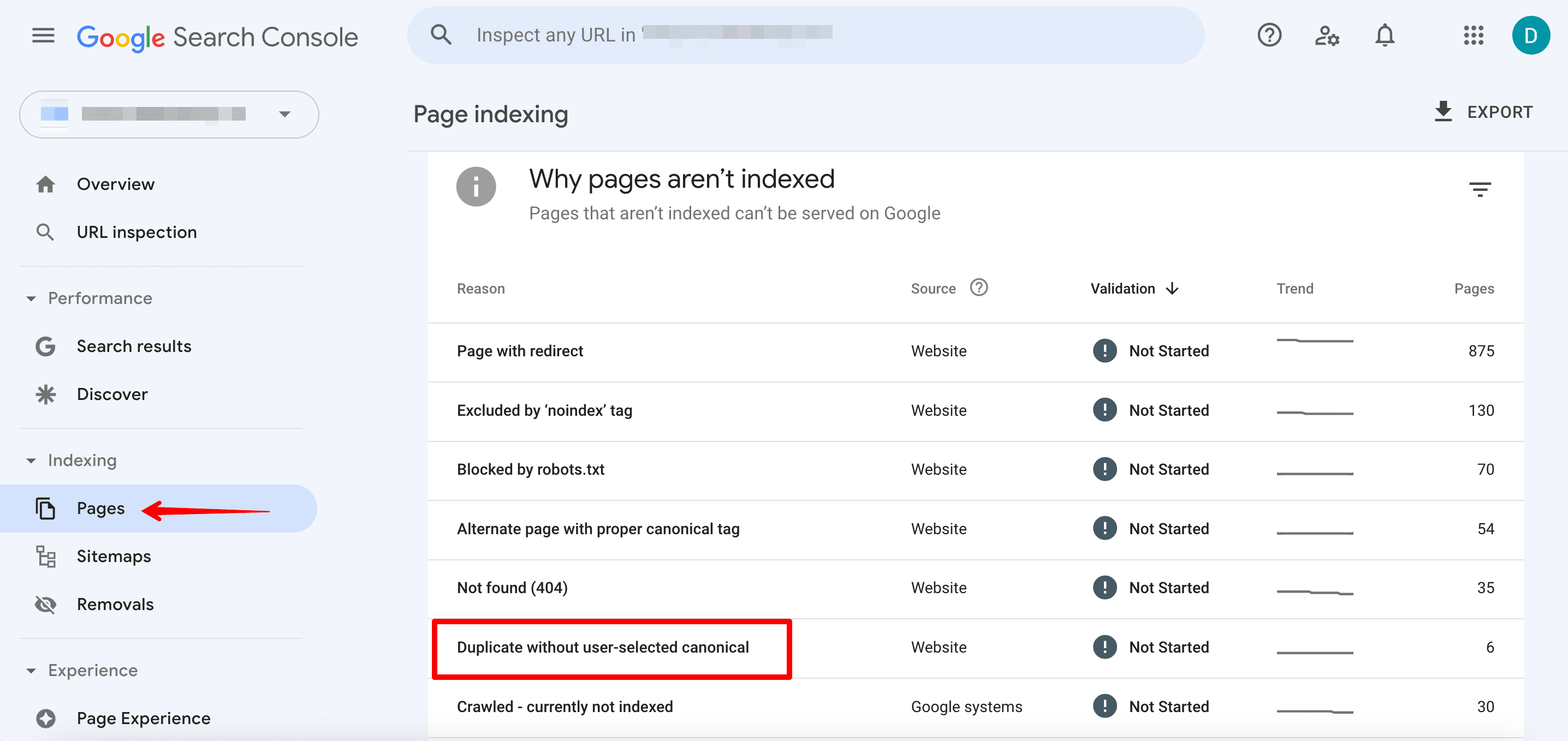

Google Search Console

The Not indexed tab of the Pages section displays all the non-indexed URLs. Here, you can see which website pages the search engine has identified as duplicates.

It's worth paying attention to the following reports:

- Duplicate without user-selected canonical

- Duplicate, Google chose different canonical than user

There, you'll see data on problems with implementing canonical tags. The first report means Google has not been able to determine from your markup which page is the original/canonical version. The second means the priority URL chosen by the webmaster doesn't match the URL Google considers canonical.

SEO tools for an in-depth website audit

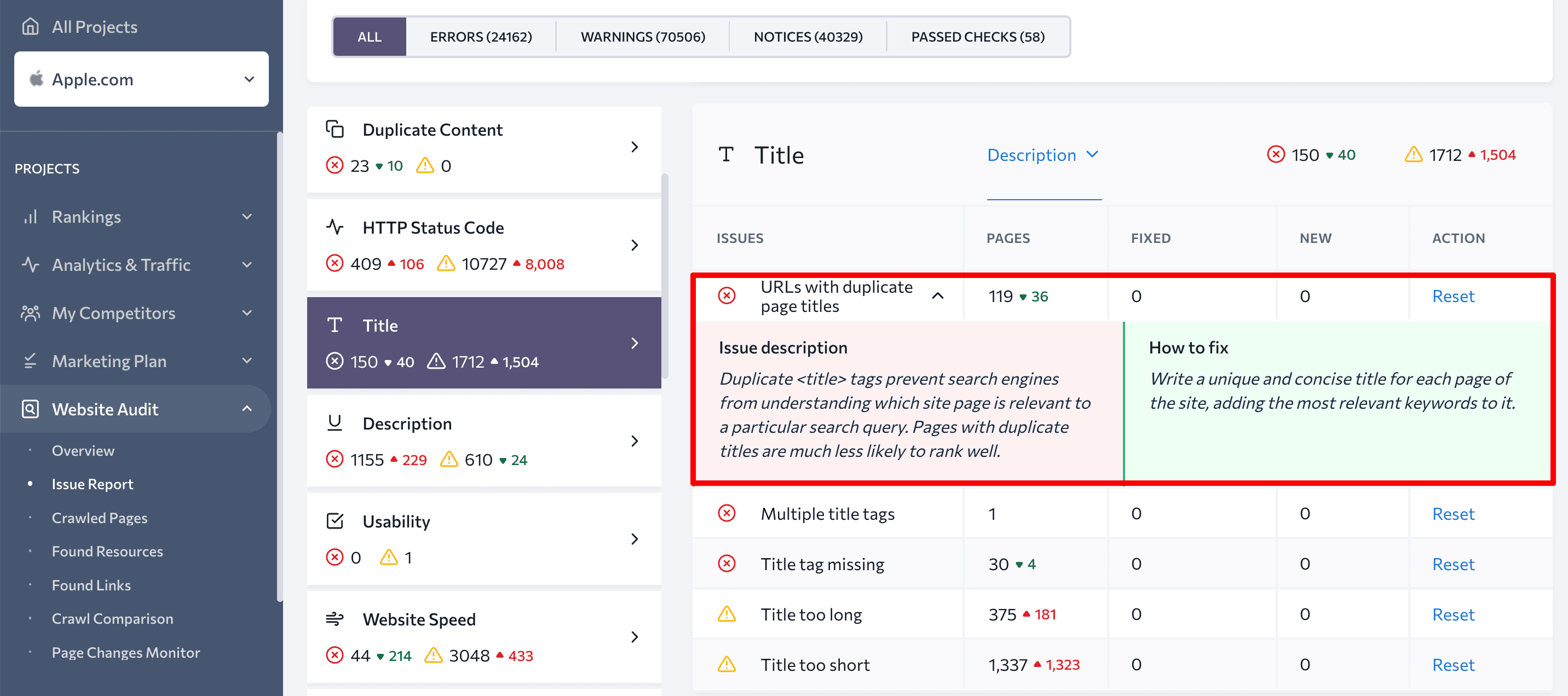

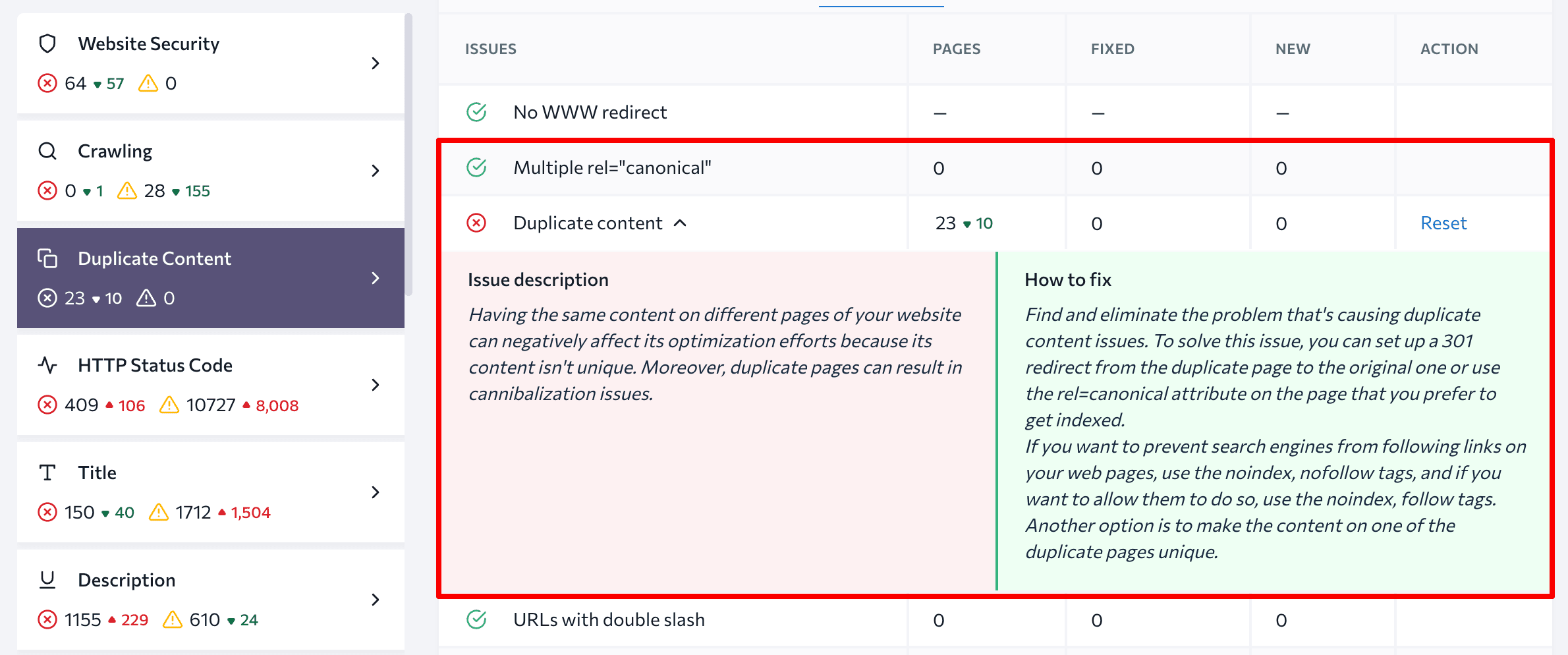

Special tools can help you perform a comprehensive website audit covering all technical parameters. SE Ranking's Website Audit checks more than 120 parameters and provides tips on how to address issues that impact your site's performance.

This tool helps identify all issues related to website pagination, including duplicate content and canonical URLs.

Additionally, the Website Audit tool will point out any title, description and H1 duplicates, which can be an issue with paginated URLs.
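The underlying idea of a duplicate-element check is simple grouping. The Python sketch below is not how SE Ranking implements it; it is a toy version of the same check, assuming the title, description and H1 of each URL have already been extracted into a dictionary.

```python
from collections import defaultdict


def find_duplicates(pages: dict, field: str) -> dict:
    """Group URLs that share the same value for a field such as 'title', 'description' or 'h1'."""
    by_value = defaultdict(list)
    for url, elements in pages.items():
        value = elements.get(field, "").strip()
        if value:  # empty or missing values are a different problem, so skip them here
            by_value[value].append(url)
    # Keep only values used by more than one URL.
    return {value: urls for value, urls in by_value.items() if len(urls) > 1}


if __name__ == "__main__":
    pages = {
        "https://example.com/shoes": {"title": "Shoes", "h1": "Shoes"},
        "https://example.com/shoes?page=2": {"title": "Shoes", "h1": "Shoes"},
        "https://example.com/shoes?page=3": {"title": "Shoes - Page 3", "h1": "Shoes"},
    }
    print(find_duplicates(pages, "title"))
```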

How do you optimize paginated content?

Let's break down how to set up SEO pagination depending on the approach you choose.

Goal 1: Index all paginated pages

Having duplicate titles, descriptions, and H1s on paginated URLs isn't a big problem. This is common practice.

However, if you choose to index all your paginated URLs, it's better to make these page elements unique.

How to set up SEO pagination:

1. Give each page a unique URL.

If you want Google to treat URLs in a paginated sequence as separate pages, use URL nesting following the url/n pattern or include a ?page=n query parameter, where n is the page number in the sequence.

Don't use URL fragment identifiers (the text after a # in a URL) for page numbers, since Google ignores them and doesn't recognize the text following that character. If Googlebot sees such a URL, it may not follow the link, assuming it has already retrieved the page.
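The fragment problem is easy to see when you reduce each URL to the part that is actually requested from the server: the text after `#` is never sent. A minimal Python sketch with hypothetical `example.com` URLs; the function names are illustrative. Two fragment-based page URLs collapse into the same address, while `?page=n` and `url/n` URLs stay distinct.

```python
from urllib.parse import urlsplit


def crawlable_address(url: str) -> str:
    """Path plus query: the part of a URL that reaches the server (the fragment never does)."""
    parts = urlsplit(url)
    address = parts.path or "/"
    if parts.query:
        address += "?" + parts.query
    return address


def fragment_collisions(urls: list) -> dict:
    """Group URLs that look different but point to the same crawlable address."""
    groups = {}
    for url in urls:
        groups.setdefault(crawlable_address(url), []).append(url)
    return {addr: group for addr, group in groups.items() if len(group) > 1}


if __name__ == "__main__":
    print(fragment_collisions(["https://example.com/shoes#page=2", "https://example.com/shoes#page=3"]))
    print(fragment_collisions(["https://example.com/shoes?page=2", "https://example.com/shoes/3"]))
```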

2. Link your paginated URLs to each other.

To make sure search engines understand the relationship between paginated pages, include links from each page to the following page using <a href> tags. Also, remember to add a link on every page in a group that goes back to the first page. This signals to the search engine which page is the primary one in the chain.
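The two linking rules above can be sketched as a small template helper. A minimal Python example, assuming a hypothetical base URL without its own query string and a `?page=n` scheme: it emits a plain `<a href>` link to the next page and, on every page after the first, a link back to page 1.

```python
from html import escape


def pagination_links(base_url: str, page: int, last_page: int) -> list:
    """Return crawlable <a href> links for one page of a ?page=n series."""
    if not 1 <= page <= last_page:
        raise ValueError("page out of range")

    def url_for(n: int) -> str:
        # Page 1 uses the bare base URL; later pages add the ?page=n parameter.
        return base_url if n == 1 else f"{base_url}?page={n}"

    links = []
    if page > 1:
        # Every page after the first links back to page 1, the primary page of the chain.
        links.append(f'<a href="{escape(url_for(1))}">First page</a>')
    if page < last_page:
        # Each page links to the following page.
        links.append(f'<a href="{escape(url_for(page + 1))}">Next</a>')
    return links


if __name__ == "__main__":
    for n in range(1, 4):
        print(n, pagination_links("https://example.com/shoes", n, 3))
```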

In the past, Google used the HTML link elements <link rel="next" href="…"> and <link rel="prev" href="…"> to identify the relationship between component URLs in a paginated series. Google no longer uses these tags, although other search engines may still use them.

3. Make sure your pages are canonical.

To make each paginated URL canonical, specify the rel="canonical" attribute in the <head> tag of each page with a link pointing to that same page (the self-referencing rel=canonical link tag method).
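For the "index all pages" approach, each page's head combines a self-referencing canonical with page-specific elements such as a unique title. A minimal Python sketch, assuming a hypothetical `?page=n` URL scheme and a "Category - Page n" title pattern chosen only for illustration.

```python
from html import escape


def paginated_head(base_url: str, category: str, page: int) -> str:
    """Build <head> tags for one paginated page that should be indexed on its own."""
    url = base_url if page == 1 else f"{base_url}?page={page}"
    # A unique title per page, so paginated URLs don't share one <title>.
    title = category if page == 1 else f"{category} - Page {page}"
    return "\n".join([
        f"<title>{escape(title)}</title>",
        # Self-referencing canonical: the page names itself as the preferred URL.
        f'<link rel="canonical" href="{escape(url)}" />',
    ])


if __name__ == "__main__":
    print(paginated_head("https://example.com/shoes", "Shoes", 2))
```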

4. Do on-page SEO.

To prevent warnings in Google Search Console or any other tool, try de-optimizing the H1 tags of paginated pages, and add useful text and a category image (with an optimized file name and alt tag) to the root page.

Goal 2: Only index the View all page

This strategy will help you effectively optimize the page that holds all the paginated content (where all results are displayed) so that it can rank high for important keywords.

How to set up SEO pagination:

1. Create a page that includes all the paginated results.

There can be several such pages, depending on the number of website sections and categories that are paginated.

2. Specify the View all page as canonical.

Every paginated page's <head> tag must contain the rel="canonical" attribute directing the crawler to the priority page for indexing.

3. Improve the View all page loading speed.

Optimized website speed not only enhances the user experience but can also help boost your search engine rankings. Identify what's slowing your View all page down using Google's PageSpeed Insights. Then, minimize any factors that hurt speed.

Goal 3: Prevent paginated URLs from being indexed

You need to instruct crawlers on how to index website pages properly. Only paginated content should be closed from indexing. All product pages and other results divided into clusters must remain visible to search engine bots.

How to set up SEO pagination:

1. Exclude all paginated pages from indexing except the first one.

Avoid using the robots.txt file for this. It's better to apply the following method:

- Block indexing with the robots meta tag.

Add the meta tag name="robots" content="noindex, follow" to the <head> section of all paginated pages except the first one. This combination of directives prevents the pages from being indexed while still allowing crawlers to follow the links they contain.

2. Optimize the first paginated page.

Since this page should be indexed, prepare it for ranking, paying special attention to content and tags.

Final thoughts

Pagination is splitting content into numbered pages, which improves website usability. If you do it right, important content will show up where it should.

There are several ways to implement SEO pagination on a website:

- Indexing all of the paginated pages

- Indexing the View All page only

- Preventing all paginated pages from being indexed, except the first one

Special tools can help you detect pagination issues and check whether you did it right. You can try, for instance, the Pages section of Google Search Console, or SE Ranking's Website Audit for a more detailed analysis.
