How To Find and Fix Duplicate Content


Easy to create, hard to get rid of, and extremely harmful to your website: that's duplicate content in a nutshell. But how badly does duplicate content actually hurt SEO? What are the technical and non-technical reasons behind duplicate content? And how can you find and fix it right away? Read on to get the answers to these key questions and more.

What is duplicate content?

Duplicate content is basically copy-pasted, recycled (or slightly tweaked), cloned, or reused content that brings little to no value to users and confuses search engines. Content duplication most often occurs either within a single website or across different domains.

Having duplicate content within a single website means that multiple URLs on your site display the same content (often unintentionally). This content usually takes the form of:

- Republished old blog posts with no added value.

- Pages with identical or slightly tweaked content.

- Scraped or aggregated content from other sources.

- AI-generated pages with poorly rewritten text.

Duplicate content across different domains means that your content shows up in more than one place across different external sites. This might look like:

- Scraped or stolen content published on other sites.

- Content that is distributed without permission.

- Identical or slightly edited content on competing sites.

- Rewritten articles that are available on multiple sites.

Does duplicate content hurt SEO?

The short answer? Yes, it does. But the impact of duplicate content on SEO depends greatly on the context and technical parameters of the page you're dealing with.

Having duplicate content on your website (say, very similar blog posts or product pages) can reduce the value and authority of that content in the eyes of search engines. This is because search engines may have a hard time figuring out which page should rank higher. Not to mention, users will feel frustrated if they can't find anything useful after landing on your page.

On the other hand, if another website takes or copies your content without permission (meaning you're not syndicating your content), it likely won't directly harm your site's performance or search visibility. As long as your content is the original version, it's of high quality, and you make small tweaks to it over time, search engines will keep identifying your pages as the original. The scraper site might take some traffic, but it almost certainly won't outrank your original site in SERPs, according to Google's explanations.

Google Search Central Blog

In the second scenario, you might have the case of someone scraping your content to put it on a different site, often to try to monetize it. It's also common for many web proxies to index parts of sites which have been accessed through the proxy. When encountering such duplicate content on different sites, we look at various signals to determine which site is the original one, which usually works very well. This also means that you shouldn't be very concerned about seeing negative effects on your site's presence on Google if you notice someone scraping your content.

How Google approaches duplicate content

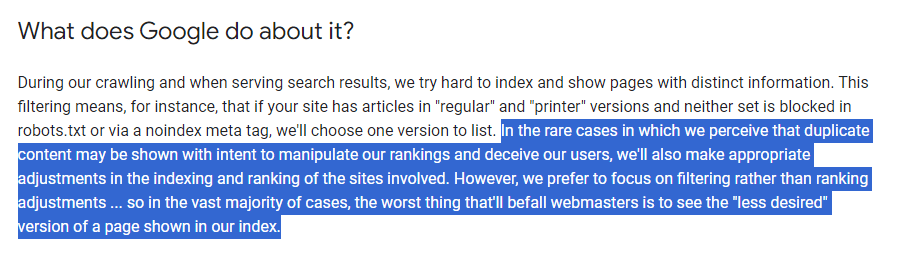

While Google officially says, "There's no such thing as a 'duplicate content penalty'", there are always some buts, which means you should read between the lines. Even if there is no direct penalty, duplicate content can still hurt your SEO in indirect ways.

In particular, Google sees it as a red flag if you deliberately scrape content from other sites and republish it without adding any new value. Google will try hard to identify the original version among similar pages and index that one, while the others will struggle to rank. This means that duplicate content can lead to lower rankings, reduced visibility, and less traffic.

Another "stop" case is when you create numerous pages, subdomains, or domains that all feature noticeably similar content. This can become yet another reason why your SEO performance declines.

Plus, keep in mind that any search engine is essentially a business. And like any business, it doesn't want to waste its efforts. That's why each website gets a crawl budget: a limit on how many of the site's resources search robots will crawl. Googlebot exhausts this budget faster if it has to spend time and resources on duplicate pages, which reduces its chances of reaching the rest of your content.

One more "stop" case is when you repost affiliate content from Amazon or other websites while adding little in the way of unique value. By providing the exact same listings, you're letting Google handle these duplicate content issues for you. It will then make the indexing and ranking adjustments it sees fit, to the best of its ability.

This means that well-intentioned website owners won't be penalized by Google if they run into technical problems on their site, as long as they don't deliberately try to manipulate search results.

Google Search Central Blog

Duplicate content on a site is not grounds for action on that site unless it appears that the intent of the duplicate content is to be deceptive and manipulate search engine results. If your site suffers from duplicate content issues, and you don't follow the advice listed above, we do a good job of choosing a version of the content to show in our search results.

So, if you don't create duplicate content on purpose, you're fine. Moreover, as Matt Cutts said about how Google views duplicate content: "Something like 25 or 30% of all the web's content is duplicate content." As always, stick to the golden rule: create unique and valuable content to deliver better user experiences and search performance.

Duplicate and AI-generated content

Another growing issue to keep in mind today is content created with AI tools. This can easily become a content duplication minefield if you're not careful. One thing is clear: AI-generated content essentially pulls together information from other places without adding any new value. If you use AI tools carelessly, just typing a prompt and copying the output, don't be surprised if the result is treated as duplicate content that ruins your SEO performance. Also keep in mind that competitors can enter similar prompts to produce very similar content.

Even if such AI content technically passes plagiarism checks (when reviewed by dedicated tools), Google is capable of identifying text created with little added value, expertise, or original experience, per its E-E-A-T standards. Although this isn't a direct penalty, relying solely on AI-generated content can make it harder for your content to perform well in search as its repetitive nature becomes noticeable over time.

To avoid duplicate content and SEO issues, it's essential to ensure that all content on a website is unique and valuable. You can achieve this by creating original content, using canonical tags properly, and avoiding content scraping and other black-hat SEO tactics.

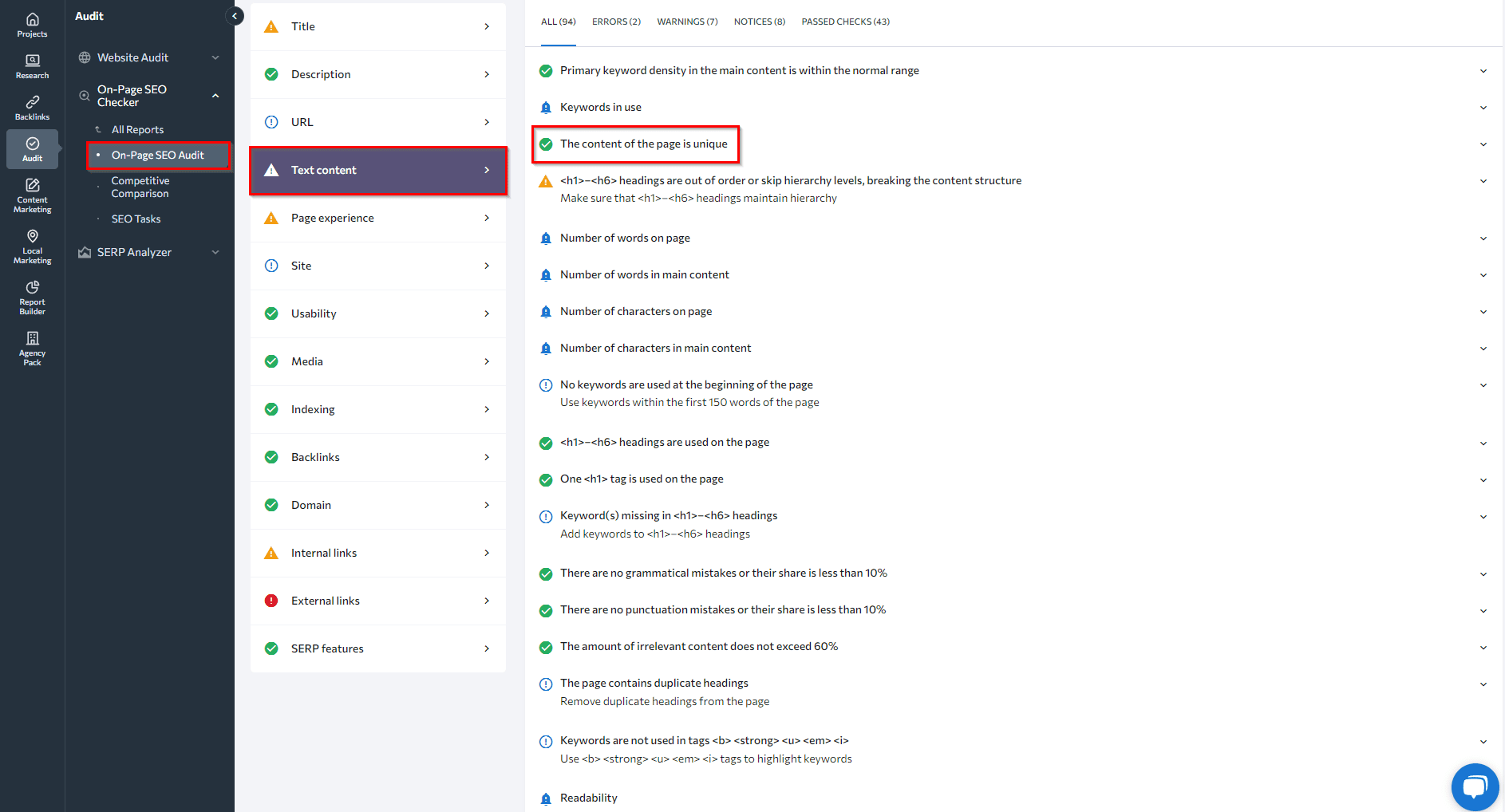

For instance, with SE Ranking's On-Page SEO Checker, you can perform a comprehensive analysis of content uniqueness, keyword density, word count compared to top-ranking competitor pages, and the use of headings on the page. Besides the content itself, this tool analyzes key on-page elements like title tags, meta descriptions, heading tags, internal links, URL structure, and keywords. By leveraging it, you can produce content that's both unique and valuable.

Types of duplicate content

There are two kinds of duplicate content issues in SEO:

- Site-wide/Cross-domain Duplicate Content

This occurs when the same or very similar content is published across multiple pages of a site or across separate domains. For instance, an online store might use the same product descriptions on the main store.com, on m.store.com, or on a localized domain version such as store.ca, leading to content duplication. If the duplicate content spans two or more websites, it's a bigger issue that may require a different solution.

- Copied Content/Technical Issues

Duplicate content can arise from directly copying content to multiple places or from technical problems that cause the same content to show up at multiple different URLs. Examples include missing canonical tags on URLs with parameters, duplicate pages without the noindex directive, and copied content that gets published without proper redirection. Without a proper setup of canonical tags or redirects, search engines may index and try to rank near-identical versions of pages.

How to check for duplicate content issues

To start, let's look at the different methods for detecting duplicate content problems. If you're focusing on issues within one domain, you can use:

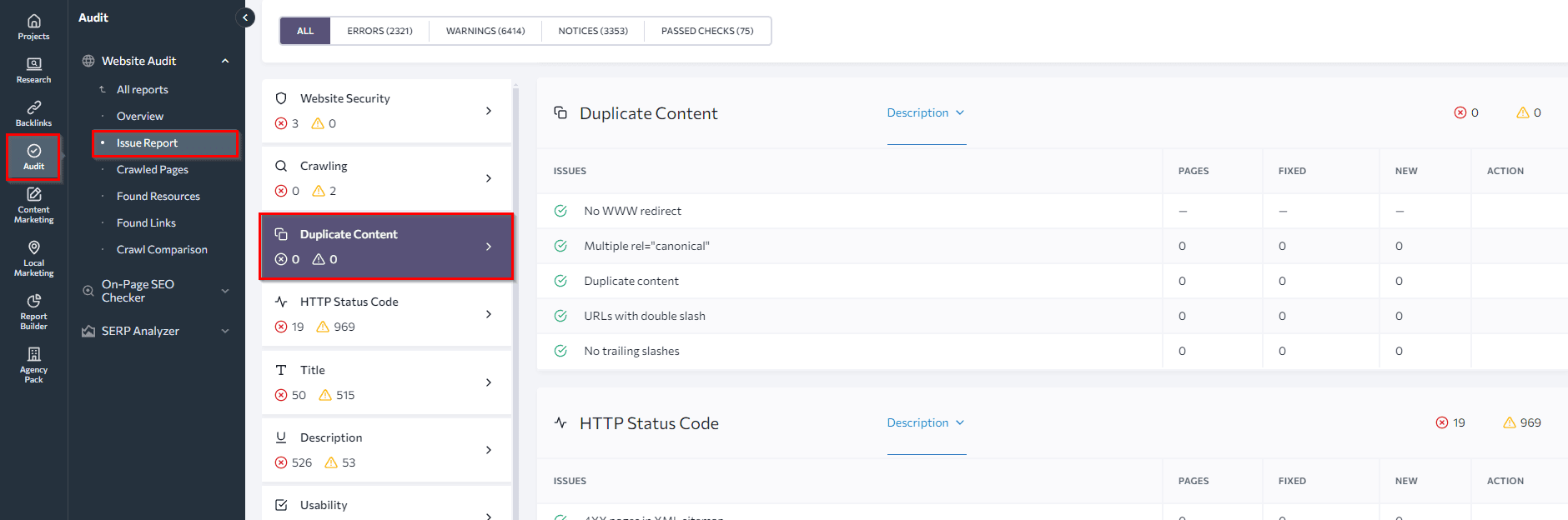

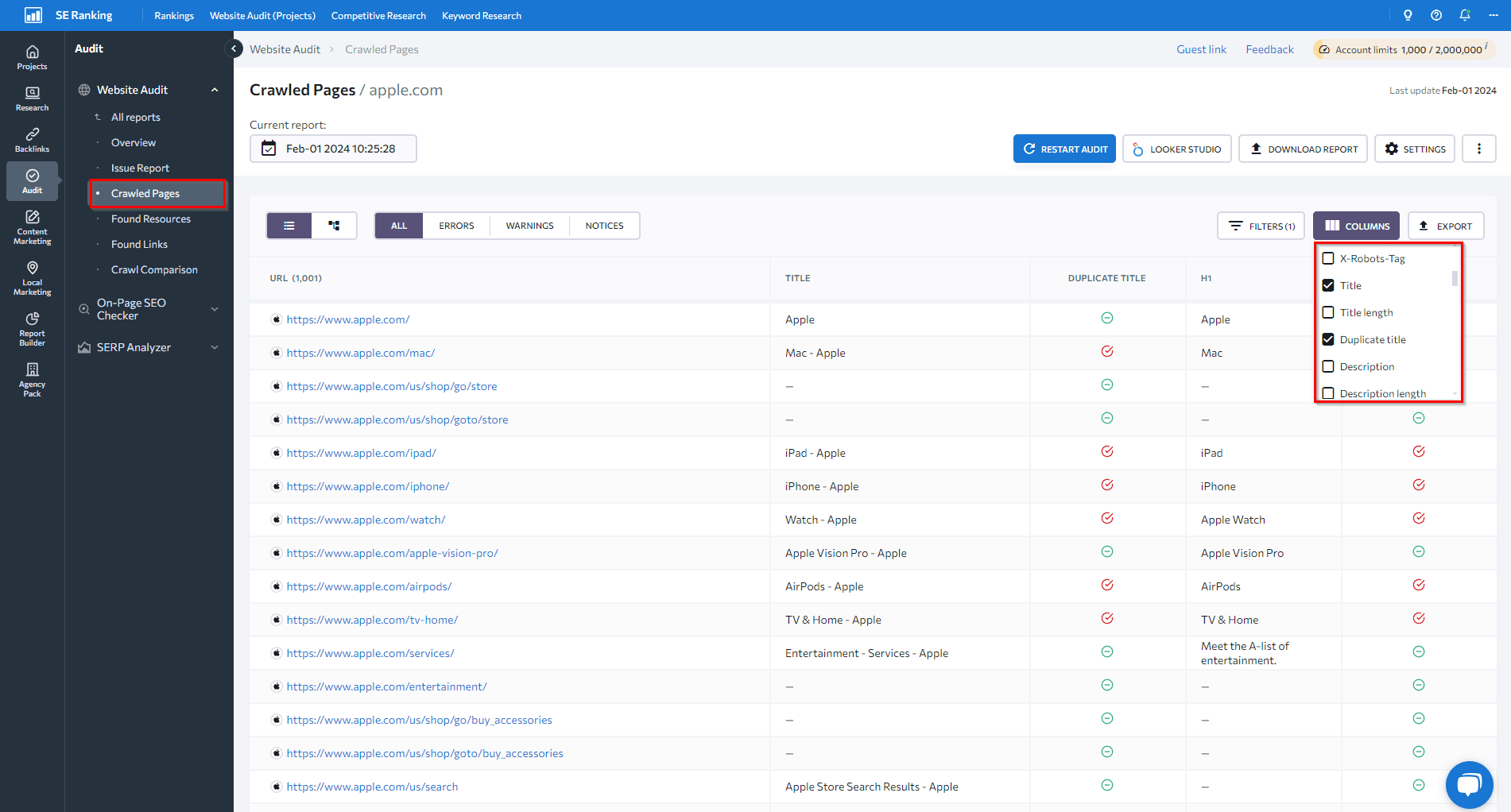

SE Ranking's Website SEO Audit tool. This tool can help you find all the web pages on your site, including duplicates. In the Duplicate Content section of the Website Audit tool, you'll find a list of pages that contain the same content for technical reasons: URLs accessible with and without www, with and without trailing slashes, and so on. If you've used canonical tags to solve the duplication problem but happen to specify multiple canonical URLs on a page, the audit will highlight this error as well.
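If you want to spot-check a single page outside the tool, the multiple-canonicals problem is easy to reproduce with Python's standard-library HTML parser. This is a minimal sketch that assumes you already have the page's HTML as a string; `find_canonicals` and `CanonicalCollector` are hypothetical names, and this is not how SE Ranking's audit works internally.

```python
from html.parser import HTMLParser


class CanonicalCollector(HTMLParser):
    """Records the href of every <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonicals.append(attributes.get("href"))


def find_canonicals(page_html):
    """Return all canonical URLs declared in one page's HTML, in document order."""
    collector = CanonicalCollector()
    collector.feed(page_html)
    collector.close()
    return collector.canonicals
```

If `find_canonicals` returns more than one URL, the page has conflicting canonical tags; an empty list means no canonical is declared at all.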

Next, look at the Crawled Pages tab. You can find more signs of duplicate content issues by looking for pages with similar titles or heading tags. To get an overview, set up the columns you want to see.
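A rough offline version of the similar-titles check could group crawled URLs by title. The sketch below assumes a hypothetical export that maps each URL to its `<title>` text, and it only catches titles that are identical after collapsing whitespace and letter case, not titles that are merely similar.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs whose titles match after collapsing whitespace and case.

    `pages` maps URL -> <title> text (a hypothetical crawl export).
    Only groups with more than one URL are returned.
    """
    groups = defaultdict(list)
    for url, title in pages.items():
        key = " ".join(title.split()).lower()  # normalize spacing and case
        groups[key].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}
```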

To find the best tool for your needs, explore SE Ranking's audit tool and compare it with other website audit tools.

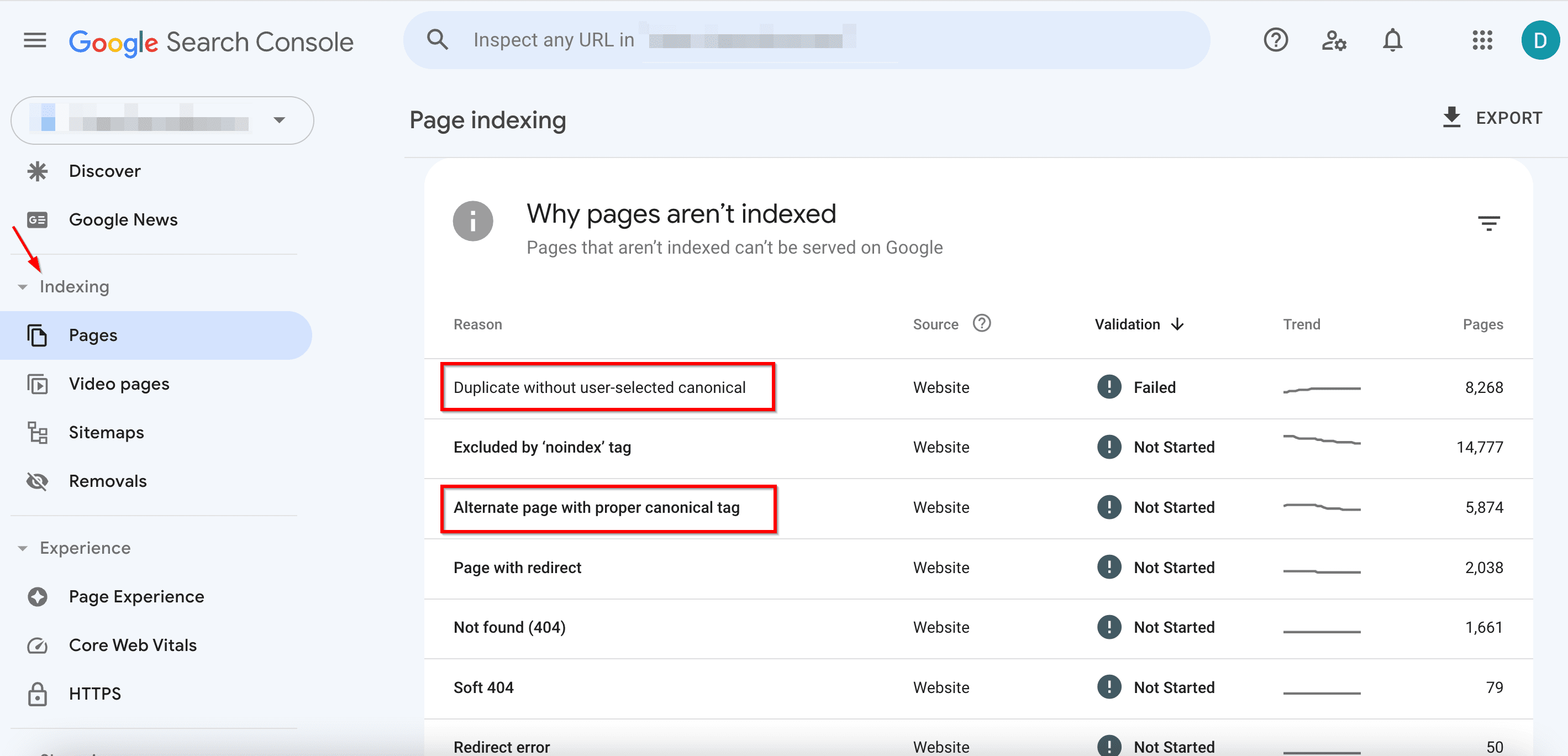

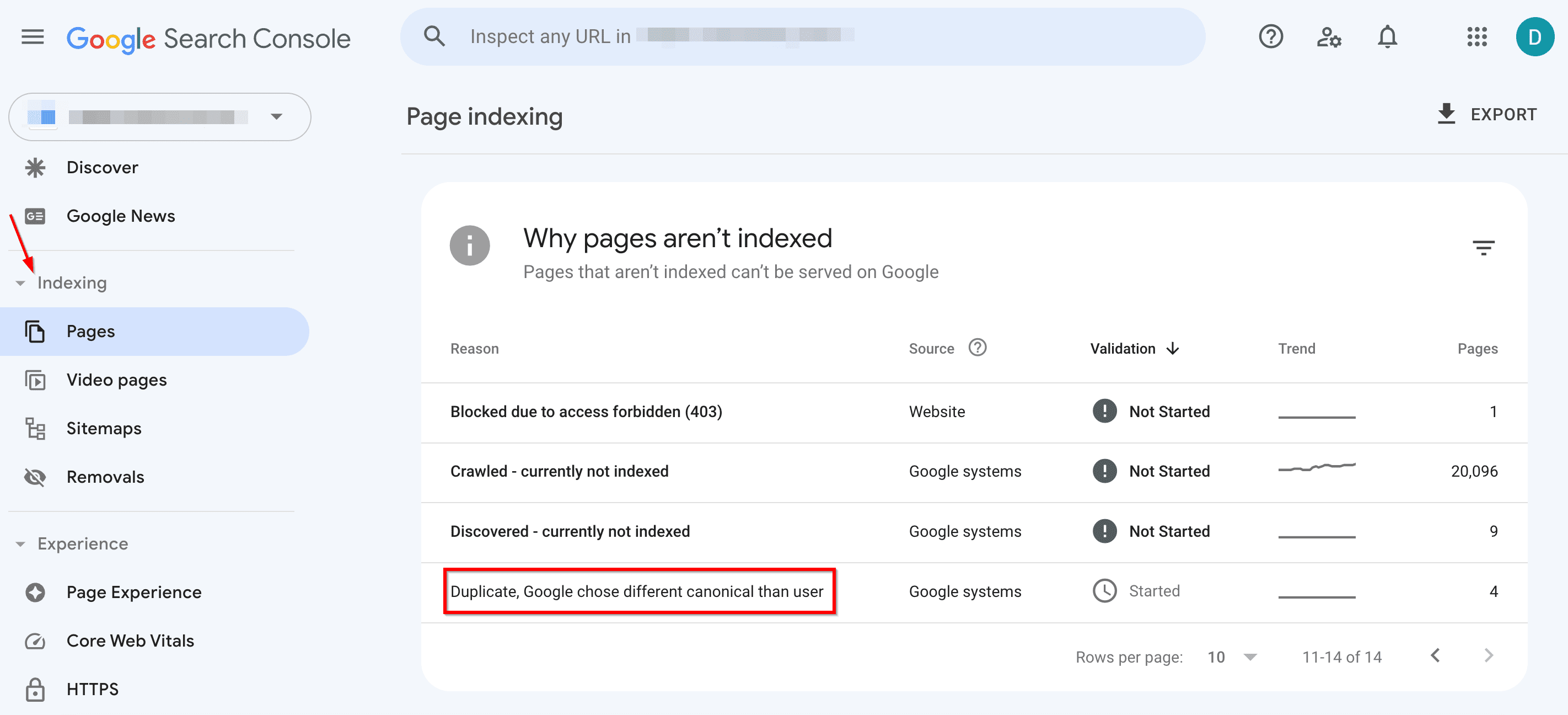

Google Search Console can also help you find identical content.

- In the Indexing section, go to Pages.

- Pay attention to the following issues:

  - Duplicate without user-selected canonical: Google found duplicate URLs without a preferred version set. Use the URL Inspection tool to find out which URL Google considers canonical for this page. This isn't an error; it happens because Google prefers not to show the same content twice. But if you think Google canonicalized the wrong URL, mark the canonical more explicitly. Or, if you don't think this page is a duplicate of the canonical URL chosen by Google, make sure the two pages have clearly distinct content.

  - Alternate page with proper canonical tag: Google sees this page as an alternate of another page. It could be an AMP page with a desktop canonical, a mobile version of a desktop canonical, or vice versa. This page links to the correct canonical page, which is indexed, so you don't need to change anything. Keep in mind that Search Console doesn't detect alternate language pages.

  - Duplicate, Google chose different canonical than user: this page is marked as the canonical for a group of pages, but Google thinks a different URL would make a more appropriate canonical. Google doesn't index this page itself; instead, it indexes the one it considers canonical.

Choose one of the methods listed below to find duplication issues across different domains.

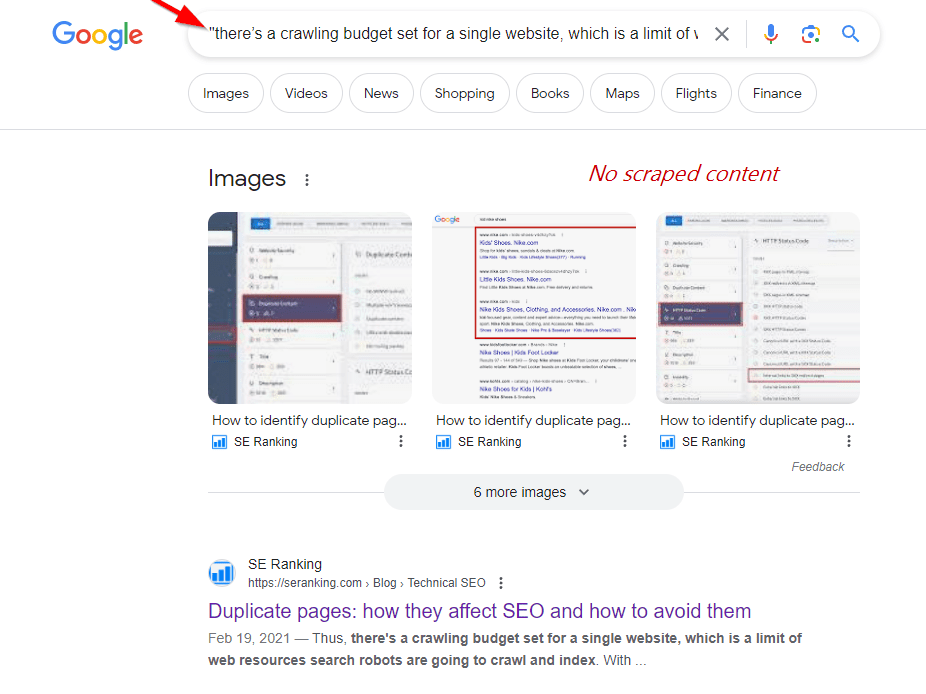

- Use Google Search: Search operators can be quite useful. Try googling a snippet of text from your page in quotes to find copies of it. This is worth doing because scraped or syndicated content can outrank your original content.
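To make the exact-match search repeatable, you can build the query URL programmatically. This sketch assumes Google's standard `q` search parameter plus the `"..."` exact-phrase and `-site:` exclusion operators; `exact_match_search_url` is a hypothetical helper meant to produce a link you open in a browser, not an automated scraper.

```python
from urllib.parse import urlencode


def exact_match_search_url(snippet, own_domain=None):
    """Build a Google search URL for an exact-phrase query.

    Quotes ask for the exact phrase; "-site:<domain>" hides your own
    pages so that copies on other sites stand out.
    """
    query = f'"{snippet.strip()}"'
    if own_domain:
        query += f" -site:{own_domain}"
    return "https://www.google.com/search?" + urlencode({"q": query})
```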

- Use the On-Page SEO Checker to examine content on specific URLs: This supports your manual content audit efforts. It uses 94 key parameters to assess your page, including content uniqueness.

To do this, scroll down to the Text Content tab within the On-Page SEO Checker. It will show you whether your content uniqueness score is within the recommended range.

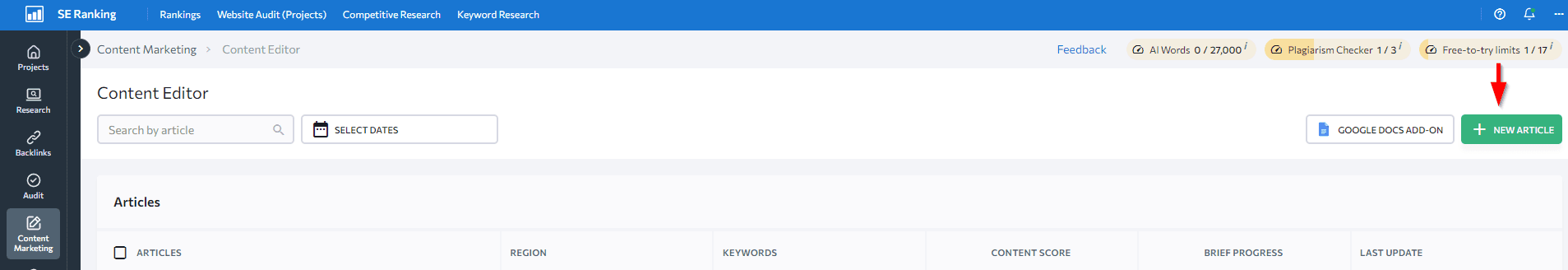

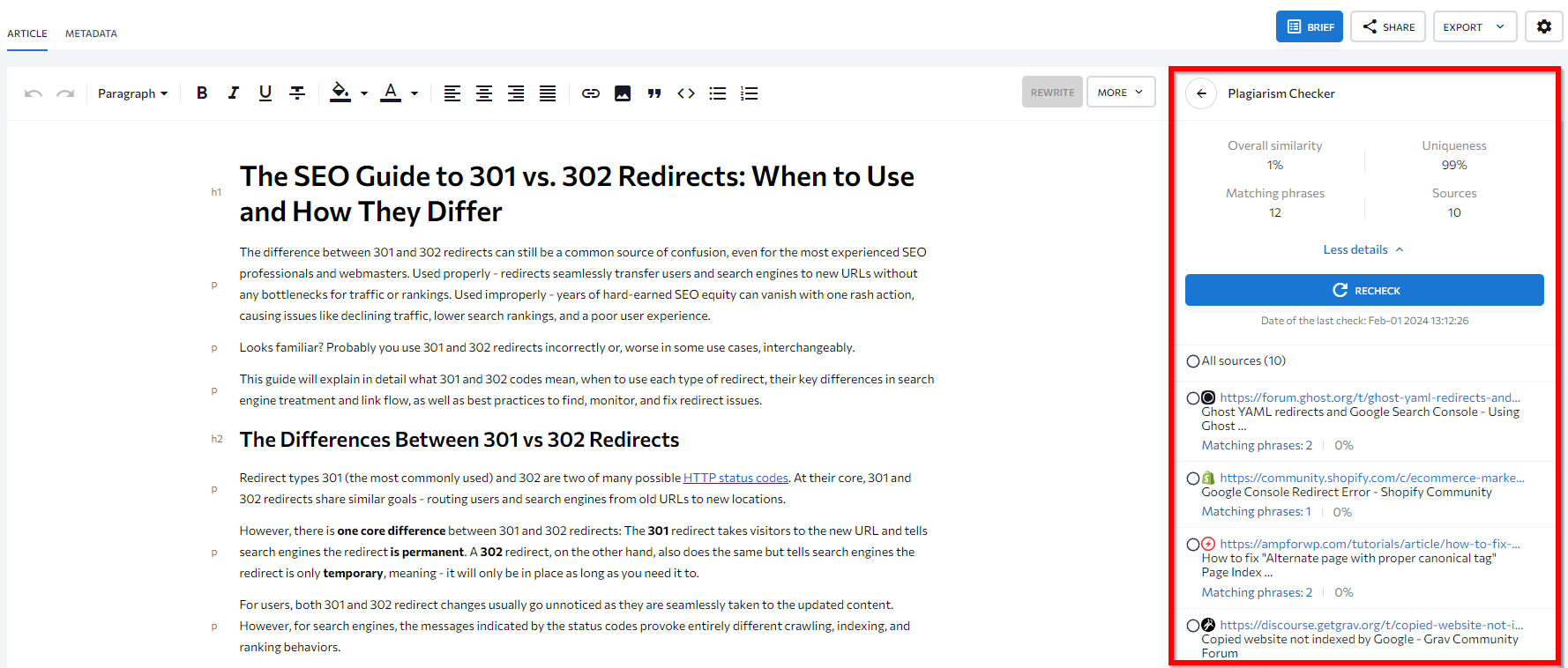

- Use SE Ranking's AI-powered Content Editor to save time. It features a built-in Plagiarism Checker that runs your content against a huge database to confirm that it's the original (not copied) version. It shows the percentage of matching words, how unique the text is, the number of pages with matches, and the unique matching words across all competitors.

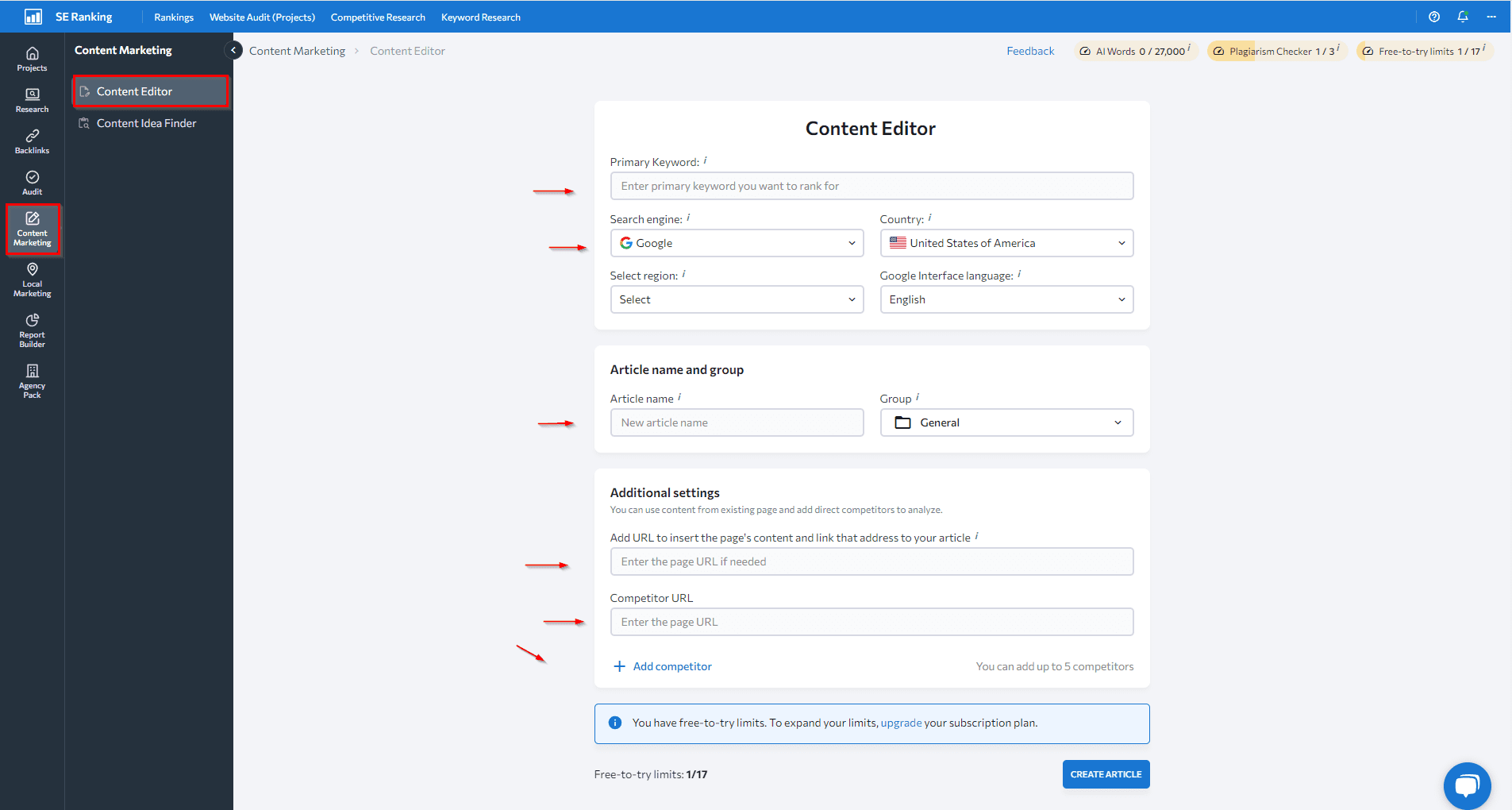

To start, find the Content Editor within the SE Ranking platform, click the "New article" button, and specify the details of your article.

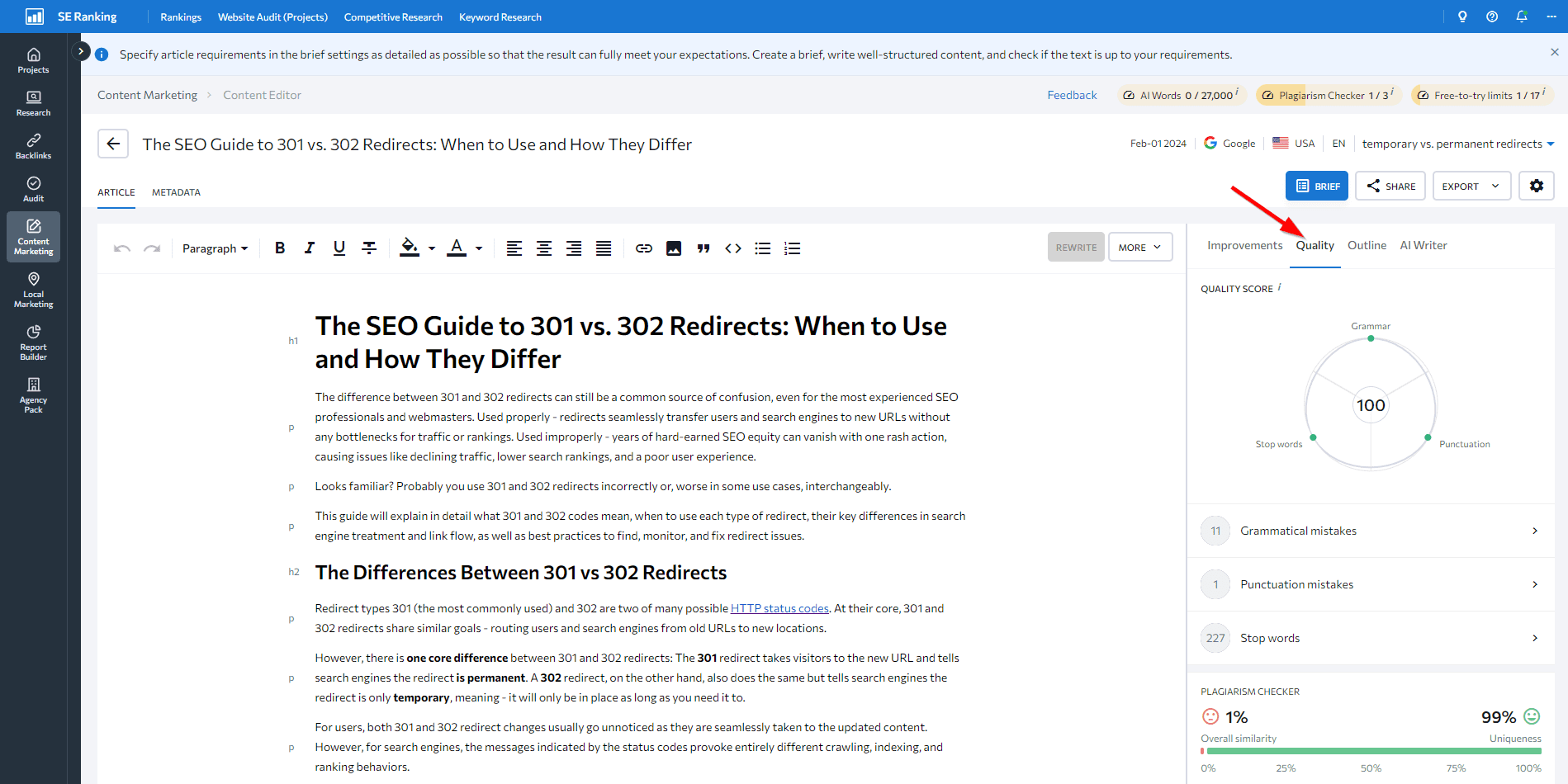

Add your article and open the Quality tab in the right sidebar.

Scroll down to Plagiarism Checker and review the details.
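Plagiarism checkers compare your text against other documents. One common technique for estimating overlap between two texts is w-shingling with Jaccard similarity. The sketch below uses that technique as a stand-in, assuming five-word shingles by default; it is not SE Ranking's algorithm, and the function names are hypothetical.

```python
import re


def shingles(text, size=5):
    """Return the set of overlapping `size`-word sequences (shingles) in `text`."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def overlap_ratio(original, candidate, size=5):
    """Jaccard similarity of the two shingle sets: 0.0 = no overlap, 1.0 = same set."""
    a, b = shingles(original, size), shingles(candidate, size)
    if not a or not b:
        return 0.0  # at least one text is shorter than a single shingle
    return len(a & b) / len(a | b)
```

A high ratio between a guest post and an already published article is a signal to look closer before publishing.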

The most common technical reasons for duplicate content

As noted earlier, unintentional duplicate content is common, mainly because certain technical factors get overlooked. Below is a list of these issues and how to fix them.

URL parameters

Duplicate content often happens when the same or very similar content is accessible at multiple URLs. Here are two common technical ways this happens:

1. Filtering and sorting parameters: Many sites use URL parameters to help users filter or sort content. This can result in multiple pages with the same or similar content that differ only in their parameters. For example, www.store.com/shirts?color=blue and www.store.com/shirts?color=red will show blue shirts and red shirts, respectively. While users may perceive the pages differently based on their preferences, search engines might interpret them as identical.

Filter options can create a huge number of combinations, especially when there are multiple choices, because the same parameters can appear in any order. As a result, the following two URLs would end up showing the exact same content:

- www.store.com/shirts?color=blue&sort=price-asc

- www.store.com/shirts?sort=price-asc&color=blue

To prevent duplicate content issues and consolidate ranking signals, add a canonical tag on each filtered URL that points to the main, unfiltered page. Note, however, that this won't fix crawl budget issues, since search engines still have to crawl the filtered URLs to see the tag. Alternatively, you can block these parameters in your robots.txt file to prevent search engines from crawling filtered versions of your pages.

2. Tracking parameters: Sites often add parameters like "utm_source" to track the source of traffic, or "utm_campaign" to identify a specific campaign or promotion, among many others. While these URLs may look unique, the content on the page remains identical to that at the URL without these parameters. For example, www.example.com/services and www.example.com/services?utm_source=twitter.

All URLs with tracking parameters should be canonicalized to the main version (without these parameters).
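The parameter fixes above can be sketched with Python's `urllib.parse`. This is a minimal illustration that assumes absolute URLs, treats every `utm_*` key as a tracking parameter, and assumes any other parameter is a filter or sort option. A real site would need to keep parameters that do change content, such as pagination. All function names are hypothetical.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: every utm_* key is tracking-only; add other keys your site uses.
TRACKING_PREFIXES = ("utm_",)


def strip_tracking(url):
    """Drop tracking parameters such as utm_source, keeping the rest in order."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if not key.lower().startswith(TRACKING_PREFIXES)]
    return urlunsplit(parts._replace(query=urlencode(kept)))


def sort_params(url):
    """Sort query parameters so that ?color=blue&sort=price-asc and
    ?sort=price-asc&color=blue collapse into one URL."""
    parts = urlsplit(url)
    params = sorted(parse_qsl(parts.query, keep_blank_values=True))
    return urlunsplit(parts._replace(query=urlencode(params)))


def main_page(url):
    """Drop the whole query string, giving the unfiltered main page.
    Assumption: every remaining parameter is a filter or sort option."""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(query="", fragment=""))


def canonical_link_tag(url):
    """Render the <link rel="canonical"> tag a filtered or tracked URL should carry."""
    return f'<link rel="canonical" href="{html.escape(main_page(url))}">'
```

Sorting parameters is mainly useful for spotting duplicates in crawl data, while the canonical tag is what tells search engines which version to prefer.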

Search results

Many websites have search boxes that let users filter and sort content within the site. But when you perform a site search, you may find that the search results page shows content that is very similar or nearly identical to another page's content.

For example, if you search for "content" on our blog (https://seranking.com/blog/?s=content), the content that appears is almost identical to the content on our category page (https://seranking.com/blog/category-content/).

This is because the search functionality tries to provide relevant results based on the user's query. If the search term matches a category exactly, the search results may include pages from that category. This can cause duplicate or near-duplicate content issues.

To fix this type of duplicate content, use noindex tags or block all URLs that contain search parameters in your robots.txt file. These actions tell search engines, "Hey, skip my search result pages, they're just duplicates." Websites should also avoid linking to these pages. Since search engines discover pages by following links, removing unwanted links keeps them from crawling the duplicate pages.
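One way to apply the noindex approach is to send an `X-Robots-Tag: noindex` response header on internal search pages. This sketch assumes a WordPress-style `?s=` search parameter, like the blog search URL mentioned above; `robots_header` is a hypothetical helper you would call from your own server code when building the response.

```python
from urllib.parse import parse_qs, urlsplit

SEARCH_PARAM = "s"  # assumption: WordPress-style site search, e.g. /blog/?s=content


def robots_header(url):
    """Return the X-Robots-Tag value to send for `url`, or None for regular pages."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return "noindex" if SEARCH_PARAM in params else None
```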

Localized site versions

Some websites have country-specific domains with the same or similar content. For example, you might have localized content for countries like the US and UK. Since the content for each locale is similar, with only slight variations, the versions can be seen as duplicates.

This is a clear signal that you should set up hreflang tags. These tags help Google understand that the pages are localized versions of the same content.

The chance of ending up with duplicates is still high even if you use subdomains or folders (instead of separate domains) for your multi-regional versions. This makes hreflang equally important for both options.
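Here is a small generator for hreflang annotations. It assumes each localized version lists every alternate, itself included, in its `<head>`, plus an optional `x-default` fallback. The URLs and the `hreflang_tags` name are illustrative, not taken from a real site.

```python
from html import escape


def hreflang_tags(alternates, default_url=None):
    """Build the <link rel="alternate" hreflang=...> set for one group of pages.

    `alternates` maps a language(-region) code such as "en-us" to its URL.
    The same full set, including the page's own entry, goes into every
    localized version's <head>.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in alternates.items()
    ]
    if default_url:
        # x-default names the page to show when no listed locale matches.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">')
    return "\n".join(tags)
```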

Non-www vs. www

Websites are sometimes available in two different versions: example.com and www.example.com. Although they lead to the same site, search engines see them as distinct URLs. As a result, pages from both the www and non-www versions get indexed as duplicates. This duplication splits link and traffic value instead of concentrating it on one preferred version. It also leads to repetitive content in search indexes.

To address this, sites should use a 301 redirect from one hostname to the other. This means redirecting either the non-www version to the www version or vice versa, depending on which version is preferred.
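The redirect decision itself is small. The sketch below assumes the www hostname is the preferred one and that the site is served over HTTPS; `host_redirect` is a hypothetical helper that your web framework or server logic would call before serving a page.

```python
PREFERRED_HOST = "www.example.com"  # assumption: the www version is the one you keep
REDIRECTED_HOSTS = {"example.com"}  # hostnames that should 301 to PREFERRED_HOST


def host_redirect(host, path, query=""):
    """Return (301, location) when `host` is a non-preferred hostname, else None."""
    bare_host = host.split(":")[0].lower()  # drop any port and normalize case
    if bare_host not in REDIRECTED_HOSTS:
        return None
    location = f"https://{PREFERRED_HOST}{path}"
    if query:
        location += f"?{query}"  # keep the query string so deep links survive
    return 301, location
```

If you prefer the non-www version, swap the two host constants.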

URLs with trailing slashes

Web URLs sometimes include a trailing slash at the end (for example, example.com/services/), and sometimes the slash is omitted (example.com/services).

Search engines treat these as separate URLs, even if they lead to the same page. So, if both versions are crawled and indexed, the content ends up duplicated across two distinct URLs.

The best practice is to pick one URL format (with or without trailing slashes) and use it consistently across all site URLs. Configure your web server and internal links to use the chosen format. Then, use 301 redirects to consolidate all relevance signals onto the chosen URL style.
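Here is a sketch of that policy check. It assumes you have chosen trailing slashes by default and want to leave file-like paths such as /sitemap.xml untouched; `slash_redirect` is hypothetical and returns the path to 301 to, or `None` when no redirect is needed.

```python
import posixpath


def slash_redirect(path, use_trailing_slash=True):
    """Return the path to 301-redirect to, or None if `path` already fits the policy.

    Assumption: paths whose last segment has a file extension (e.g. /sitemap.xml)
    are left alone, and the root "/" never changes.
    """
    if path == "/" or posixpath.splitext(path)[1]:
        return None
    if use_trailing_slash and not path.endswith("/"):
        return path + "/"
    if not use_trailing_slash and path.endswith("/"):
        return path.rstrip("/") or "/"
    return None
```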

Pagination

Many websites split long lists of content (e.g., articles or products) across numbered pagination pages, such as:

- /articles/?page=2

- /articles/page/2

It's important to make sure that pagination is accessible through only one type of URL, such as either /?page=2 or /page/2, not both (otherwise, the variants will be considered duplicates). It's also a common mistake to treat paginated pages themselves as duplicates, since Google doesn't view them as such.
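If you keep the /page/2 format, requests for the ?page=2 variant can be 301-redirected to it. This sketch assumes that format, treats page 1 as the unpaginated list itself, and uses a hypothetical `pagination_redirect` helper.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def pagination_redirect(url):
    """Return the /page/N URL that a ?page=N request should 301 to, or None.

    Assumptions: /page/N is the single format the site keeps, and page 1
    is simply the unpaginated list itself.
    """
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    pages = [value for key, value in params if key == "page"]
    if not pages or not re.fullmatch(r"[0-9]+", pages[0]):
        return None  # no numeric ?page= parameter: nothing to redirect
    number = int(pages[0])
    base = parts.path if parts.path.endswith("/") else parts.path + "/"
    new_path = base if number == 1 else f"{base}page/{number}"
    other_params = [(key, value) for key, value in params if key != "page"]
    return urlunsplit(parts._replace(path=new_path, query=urlencode(other_params)))
```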

Tag and category pages

Websites sometimes display products on both tag and category pages to organize content by topic.

For instance:

- example.com/category/shirts/

- example.com/tag/blue-shirts/

If the category page and the tag page display an identical list of shirts, the same content is duplicated across both pages.

Tags usually offer little to no value for your site, so it's best to avoid them. Instead, you can add filters or sorting options, but be careful, as these can also cause duplicates, as mentioned above. Another solution is to use noindex tags on these pages, but keep in mind that Google will still crawl them.

Indexable staging/testing environments

Many websites use separate staging or testing environments to test new code changes before deploying them to production. Staging sites often contain content that is identical or very similar to the content on the live site.

But if these test URLs are publicly accessible and get crawled, search engines will index the content from both environments. This can make the live site compete against its own staging copy.

For instance:

- www.website.com

- test.website.com

So, if the staging site has already been indexed, remove it from the index first. The fastest option is to submit a removal request in Search Console. You can also use HTTP authentication, which makes Googlebot receive a 401 status code and prevents it from indexing these pages (Google doesn't index URLs that return 4XX codes).
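HTTP authentication can be put in front of a staging app with a few lines of WSGI middleware. This is a minimal Basic-auth sketch using only the Python standard library; the credentials and the `require_basic_auth` name are placeholders, and in practice this is often configured in the web server itself, with staging served over HTTPS.

```python
import base64
import hmac

# Placeholder credentials for illustration only; load real ones from config.
STAGING_USER = "team"
STAGING_PASSWORD = "change-me"


def require_basic_auth(app):
    """WSGI middleware that answers 401 unless valid Basic credentials are sent."""
    token = base64.b64encode(f"{STAGING_USER}:{STAGING_PASSWORD}".encode()).decode()
    expected = f"Basic {token}".encode()

    def middleware(environ, start_response):
        supplied = environ.get("HTTP_AUTHORIZATION", "").encode()
        # compare_digest avoids leaking how many characters matched via timing.
        if not hmac.compare_digest(supplied, expected):
            start_response("401 Unauthorized", [
                ("WWW-Authenticate", 'Basic realm="staging"'),
                ("Content-Type", "text/plain"),
            ])
            return [b"Authentication required"]
        return app(environ, start_response)

    return middleware
```

Crawlers never send these credentials, so every staging URL they request gets the 401 response.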

Other non-technical reasons for duplicate content

Duplicate content isn't caused only by technical issues. There are also several non-technical factors that can lead to duplicate content and other SEO issues.

For example, other site owners may deliberately copy unique content from sites that rank high in search engines in an attempt to benefit from existing ranking signals. As mentioned earlier, scraping or republishing content without permission creates unauthorized duplicate versions that compete with the original content.

Sites may also publish guest posts or content written by freelancers that hasn't been properly screened for uniqueness. If the writer reuses or repurposes existing content, the site may unintentionally publish duplicate versions of articles or information already available elsewhere online.

In both cases, the results tend to be bad and unexpected.

Luckily, the solution for both is simple. Here's how to approach it:

- Before posting guest articles or outsourced content, use plagiarism checkers to make sure they're completely original and not copied.

- Monitor your content for any unauthorized copying or scraping by other sites.

- Agree on protective measures with partners and affiliates to ensure your content isn't republished excessively.

- Put a DMCA badge on your website. If someone copies your content while you display the badge, the DMCA service will require them to take it down, free of charge. The service also provides tools to help you find your plagiarized content on other websites and can quickly remove any copied text, images, or videos.

How to avoid duplicate content

When creating a website, make sure you have appropriate procedures in place to prevent duplicate content from appearing on (or in relation to) your site.

For example, you can prevent unnecessary URLs from being crawled with the help of the robots.txt file. Note, however, that you should always test the file (e.g., with our free Robots.txt Tester) to make sure it doesn't block important pages from search crawlers.
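Python's standard `urllib.robotparser` can serve as a quick stand-in for a robots.txt tester. The rules and URLs below are hypothetical. Note that this parser applies simple prefix matching in file order and does not implement Google's wildcard and longest-match rules, so treat it as a rough sanity check only.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /tag/
"""  # hypothetical rules for illustration

MUST_STAY_CRAWLABLE = [
    "https://example.com/category/shirts/",
    "https://example.com/blog/duplicate-content/",
]


def blocked_important_pages(robots_txt, urls, agent="Googlebot"):
    """Return the URLs from `urls` that the given robots.txt would block for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]
```

An empty result for your list of key pages means none of them is accidentally disallowed.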

You should also keep unnecessary pages out of the index with <meta name="robots" content="noindex"> or the X-Robots-Tag: noindex header in the server response. These are the easiest and most common ways to avoid problems with duplicate page indexing.
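To confirm that a page actually carries noindex, check both places it can appear. This sketch assumes you already have the response HTML and headers; it treats `none` as implying noindex, as Google's robots documentation does, and it ignores user-agent-prefixed header values such as `googlebot: noindex`. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_noindex(page_html, headers):
    """True if the meta robots tag or the X-Robots-Tag header blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(page_html)
    parser.close()
    directives = set(parser.directives)
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})
```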

Important! If search engines have already seen the duplicate pages and you're using the canonical tag (or the noindex directive) to fix the problem, wait until search robots recrawl these pages. Only then should you block them in the robots.txt file. Otherwise, the crawler won't see the canonical tag or the noindex directive.

Eliminating duplicates is non-negotiable

Duplicate content has many consequences for SEO. It can cause serious harm to websites, so you shouldn't underestimate its impact. By understanding where the problem comes from, you can keep your web pages under control and avoid duplicates. If you do have duplicate pages, it's crucial to act promptly. Performing site audits, setting target URLs for keywords, and running regular ranking checks help you spot the issue as soon as it appears.

Have you ever struggled with duplicate pages? How did you handle the situation? Share your experience with us in the comments section below!
