SPA SEO: Methods and Best Practices

Historically, web developers have used HTML for content, CSS for styling, and JavaScript (JS) for interactive elements. JS allows the addition of features like pop-up dialog boxes and expandable content on web pages. Nowadays, over 98% of all sites use JavaScript because of its ability to modify web content based on user actions.

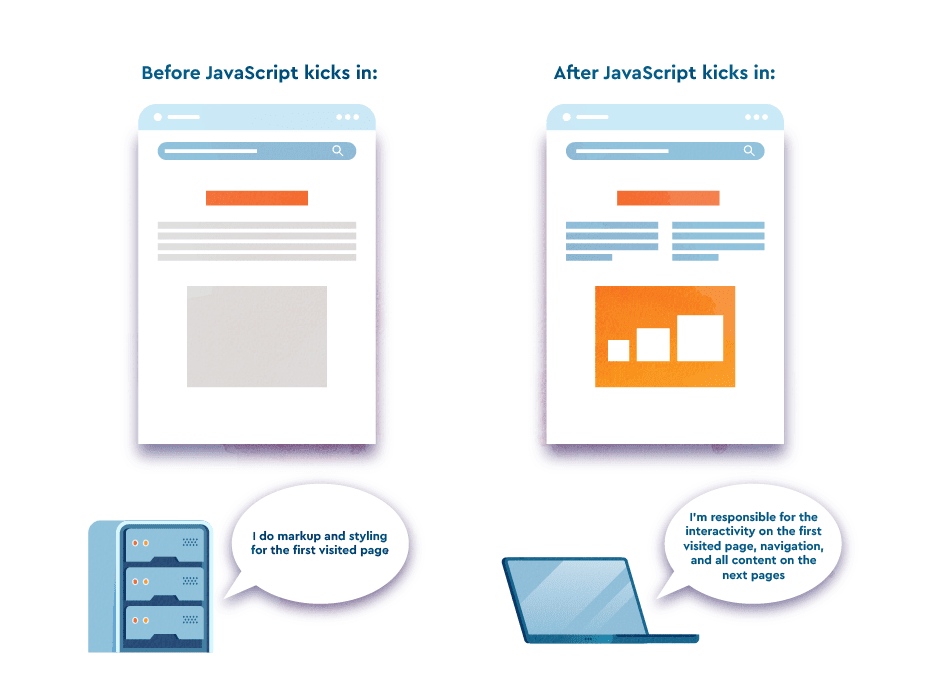

A relatively new trend in incorporating JS into websites is the adoption of single-page applications (SPAs). Unlike traditional websites that load all their resources (HTML, CSS, JS) by requesting each from the server every time it’s needed, SPAs only require an initial load and don’t continue to burden the server. Instead, the browser handles all the processing.

This leads to faster websites, which is good because studies show that online shoppers expect websites to load within three seconds. The longer the load takes, the fewer customers will stay on the site. Adopting the SPA approach can be a good solution to this problem, but it can also be a disaster for SEO if done incorrectly.

In this post, we’ll discuss how SPAs are made, examine the challenges they present for optimization, and provide guidance on how to do SPA SEO properly. Get SPA SEO right and search engines will be able to understand your SPAs and rank them well.

SPA in a nutshell

A single-page application, or SPA, is a specific JavaScript-based technology for website development that doesn’t require further page loads after the first page view. React, Angular, and Vue are the most popular JavaScript frameworks used for building SPAs. They mostly differ in terms of supported libraries and APIs, but all of them provide fast client-side rendering.

An SPA greatly enhances site speed by eliminating repeated page requests between the browser and the server. But search engines are not so thrilled about this JavaScript trick. The issue lies in the fact that search engines don’t interact with the site like users do, resulting in a lack of accessible content. Search engines don’t understand that the content is being added dynamically, leaving them with a blank page yet to be filled.

End users benefit from SPA technology because they can easily navigate through web pages without having to deal with extra page loads and layout shifts. Given that single-page applications cache all the resources in local storage (after they’re loaded on the initial request), users can continue browsing them even with an unstable connection. Despite the extra SEO effort it demands, the technology’s benefits ensure its enduring presence.

Examples of SPAs

Many high-profile websites are built with single-page application architecture. Popular examples include:

Google Maps allows users to view maps and find directions. When a user initially visits the site, a single page is loaded, and further interactions are handled dynamically through JavaScript. The user can pan and zoom the map, and the application will update the map view without reloading the page.

Airbnb is a popular travel booking site that uses a single-page design that dynamically updates as users search for accommodations. Users can filter search results and explore various property details without the need to navigate to new pages.

When users log in to Facebook, they’re presented with a single page that allows them to interact with posts, photos, and comments, eliminating the need to refresh the page.

Trello is a web-based project management tool powered by SPA. On a single page, you can create, manage, and collaborate on projects, cards, and lists.

Spotify is a popular music streaming service. It lets you browse, search, and listen to music on a single page. No need for reloading or switching between pages.

Is a single-page application good for SEO?

Yes, if you implement it properly.

SPAs provide a seamless and intuitive user experience. They don’t require the browser to reload the entire page when navigating between different sections, so users enjoy a fast browsing experience. Users are also less likely to be distracted or frustrated by page reloads or interruptions, so their engagement can be higher.

The SPA approach is also popular among web developers because it provides high-speed operation and rapid development. Developers can apply this technology to create different platform versions based on ready-made code. This speeds up the desktop and mobile application development process, making it more efficient.

While SPAs can offer numerous benefits for users and developers, they also present several challenges for SEO. As search engines traditionally rely on HTML content to crawl and index websites, it can be challenging for them to access and index the content on SPAs that rely heavily on JavaScript. This can result in crawlability and indexability issues.

This approach tends to be a good one for both users and SEO, but you must take the right steps to ensure your pages are easy to crawl and index. With proper single-page app optimization, your SPA website can be just as SEO-friendly as any traditional website.

In the following sections, we’ll go over how to optimize SPAs.

Why it’s hard to optimize SPAs

Before JS became dominant in web development, search engines only crawled and indexed text-based content from HTML. As JS became more and more popular, Google recognized the need to interpret JS resources and understand pages that depend on them. Google’s search crawlers have made significant improvements over the years, but a lot of issues persist regarding how they perceive and access content on single-page applications.

While there is little information available on how other search engines perceive single-page applications, it’s safe to say that none of them are crazy about JavaScript-dependent websites. If you’re targeting search platforms beyond Google, you’re in quite a pickle. A 2017 Moz experiment revealed that only Google and, surprisingly, Ask were able to crawl JavaScript content, while all other search engines remained totally blind to JS.

Currently, no search engine apart from Google has made any noteworthy announcements regarding efforts to better understand JS and single-page application websites. But some official recommendations do exist. For example, Bing makes the same suggestions as Google, promoting server-side pre-rendering, a technology that allows bingbot (and other crawlers) to access static HTML as the most complete and comprehensible version of the website.

Crawling issues

The HTML of an SPA, which is the part search engines can easily crawl, doesn’t contain much information. All it contains is a reference to an external JavaScript file in the src attribute of a <script> tag. The browser runs the script from this file, and the content is then loaded dynamically. If the crawler fails to perform the same operation, it sees an empty page.

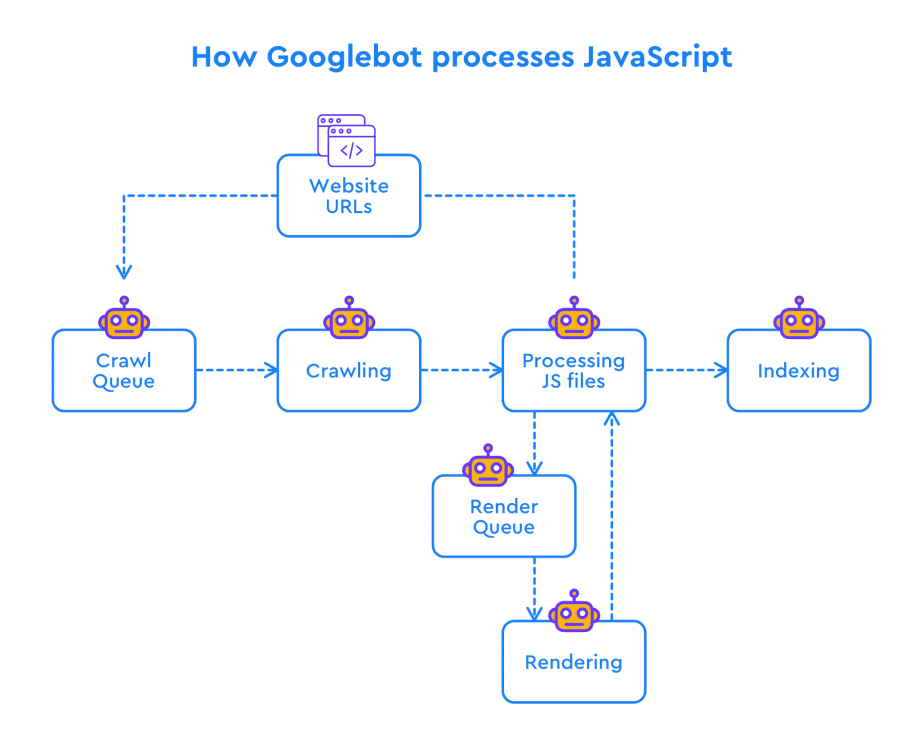

Back in 2014, Google announced that it was improving its ability to understand JS pages, but it also admitted that there were tons of blockers preventing it from indexing JS-rich websites. During the Google I/O ’18 series, Google analysts discussed the concept of two waves of indexing for JavaScript-based sites. This means that Googlebot re-renders the content when it has the necessary resources. Due to the increased processing power and memory required for JavaScript, the cycle of crawling, rendering, and indexing isn’t instantaneous.

Fortunately, in 2019, Google said that it aimed for a median time of five seconds for JS-based web pages to go from crawler to renderer. Just as webmasters were becoming accustomed to the two waves of indexing approach, Google’s Martin Splitt said in 2020 that the situation was “more complicated” and that the previous concept no longer held true.

Google continued to upgrade Googlebot using the latest web technologies, improving its ability to crawl and index websites. As part of these efforts, Google introduced the concept of an evergreen Googlebot, which operates on the latest Chromium rendering engine (currently version 114).

With its evergreen status, Googlebot gains access to numerous new features that are available to modern browsers. This empowers Googlebot to more accurately render and understand the content and structure of modern websites, including single-page applications. This results in website content that can be crawled and indexed better.

The main thing to understand here is that there’s a delay in how Google processes JavaScript on web pages, and JS content that is loaded on the client side might not be seen as complete, let alone properly indexed. Search engines may discover the page but won’t be able to determine whether the copy on that page is of high quality or if it corresponds to the search intent.

Problems with error 404

With an SPA, you also lose the traditional logic behind the 404 error page and many other non-200 server status codes. Due to the nature of SPAs, where everything is rendered by the browser, the web server tends to return a 200 HTTP status code to every request. As a result, search engines have difficulty distinguishing pages that aren’t valid for indexing.

URL and routing

While SPAs provide an optimized user experience, it can be difficult to create a good SEO strategy around them due to their complex URL structure and routing. Unlike traditional websites, which have distinct URLs for each page, SPAs typically have only one URL for the entire application and rely on JavaScript to dynamically update the content on the page.

Developers must carefully manage the URLs, making them intuitive and descriptive, ensuring that they accurately reflect the content displayed on the page.

To address these challenges, you can use server-side rendering and pre-rendering, which create static versions of the SPA. Another option is to use the History API and its pushState() method, which updates the URL without a page reload and without fragment identifiers. Combined with fetching resources asynchronously, it lets you create URLs that accurately reflect the content displayed on the page.

Tracking issues

Another issue that arises with SPA SEO is related to Google Analytics tracking. For traditional websites, the analytics code is run every time a user loads or reloads a page, accurately counting each view. But when users navigate through different pages on a single-page application, the code is only run once, failing to trigger individual pageviews.

The nature of dynamic content loading prevents GA from getting a server response for each pageview. This is why standard reports in GA4 don’t offer the necessary analytics in this scenario. However, you can overcome this limitation by leveraging GA4’s Enhanced measurement and configuring Google Tag Manager accordingly.

Pagination can also pose challenges for SPA SEO, as search engines may have difficulty crawling and indexing dynamically loaded paginated content. Luckily, there are some methods that you can use to track user activity on a single-page application website.

We’ll cover these methods later. For now, keep in mind that they require additional effort.

How to do SPA SEO

To ensure that your SPA is optimized for both search engines and users, you’ll need to take a strategic approach to on-page optimization. Here’s your complete on-page SEO guide, which fully covers the most effective methods for on-site optimization.

Also, consider using tools that can help you with this process, such as SE Ranking’s On-Page SEO Checker Tool. With this tool, you can optimize your page content for your target keywords, your page title and description, and other elements.

Now, let’s take a close look at the best practices of SEO for SPAs.

Server-side rendering

Server-side rendering (SSR) involves rendering a website on the server and sending it to the browser. This technique allows search bots to crawl all website content, including content produced by JavaScript-based elements. While this is a lifesaver in terms of crawling and indexing, it might slow down the load. One noteworthy aspect of SSR is that it diverges from the natural approach taken by SPAs. SPAs rely primarily on client-side rendering, which contributes to their fast and interactive nature, providing a seamless user experience. Client-side rendering also simplifies the deployment process.

Isomorphic JS

One possible rendering solution for a single-page application is isomorphic, or “universal”, JavaScript. Isomorphic JS plays a major role in generating pages on the server side, alleviating the need for a search crawler to execute and render JS files.

The “magic” of isomorphic JavaScript applications lies in their ability to run on both the server and the client side. Users interact with the website as if its content were rendered by the browser when, in fact, they are using the HTML file generated on the server side. There are frameworks that facilitate isomorphic app development for each popular SPA framework. Let’s use Next.js and Gatsby for React as examples. The former generates HTML for each request, while the latter generates a static website and stores HTML in the cloud. Similarly, Nuxt.js for Vue renders JS into HTML on the server and sends the data to the browser.

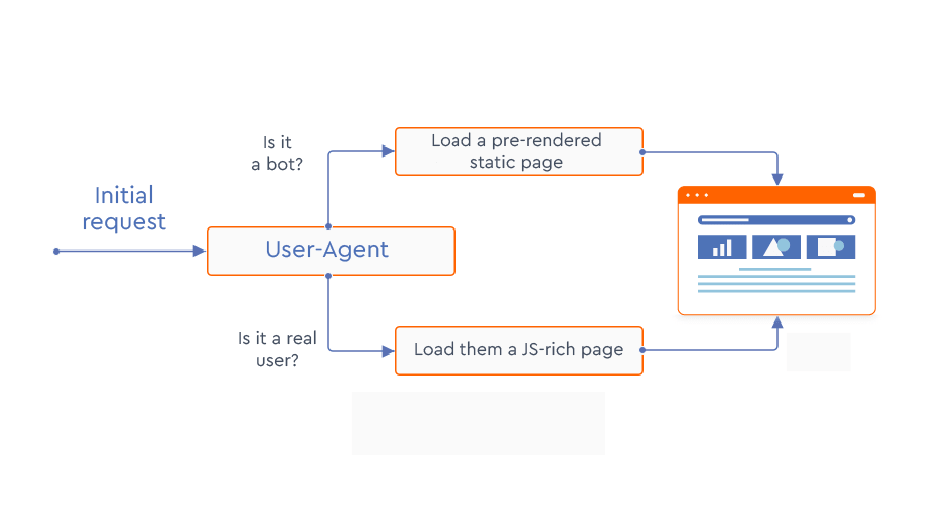

Pre-rendering

Another go-to solution for single-page applications is pre-rendering. This involves loading all HTML elements and storing them in the server cache, which can then be served to search crawlers. Several services, like Prerender and BromBone, intercept requests made to a website and show different versions of pages to search bots and real users. The cached HTML is shown to the search bots, while the “normal” JS-rich content is shown to real users. Websites with fewer than 250 pages can use Prerender for free, while bigger ones have to pay a monthly fee starting from $200. It’s a straightforward solution: you upload the sitemap file and it does the rest. BromBone doesn’t even require a manual sitemap upload and costs $129 per month.
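To make the idea more concrete, here is a minimal JavaScript sketch of how a pre-rendering layer might decide which version of a page to serve. It is an illustration only and not how Prerender or BromBone work internally: the function names (`isSearchBot`, `chooseResponse`), the list of user-agent substrings, and the in-memory cache are all assumptions made for this example.

```javascript
// Hypothetical list of crawler user-agent substrings; extend it for your own needs.
const BOT_PATTERN = /googlebot|bingbot|yandex|duckduckbot|baiduspider/i;

// Returns true when the User-Agent header looks like a known search crawler.
function isSearchBot(userAgent) {
  return typeof userAgent === "string" && BOT_PATTERN.test(userAgent);
}

// Picks the cached static HTML for bots and the regular JS shell for everyone else.
// `prerenderCache` is assumed to be a Map of path -> pre-rendered HTML string.
function chooseResponse(userAgent, path, prerenderCache) {
  if (isSearchBot(userAgent) && prerenderCache.has(path)) {
    return { source: "prerendered", body: prerenderCache.get(path) };
  }
  return {
    source: "spa-shell",
    body: '<div id="app"></div><script src="/app.js"></script>',
  };
}
```

In a real setup this decision would sit in your server or CDN middleware, and the cache would be filled by whichever rendering service or script you use.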

There are other, more time-consuming methods for serving static HTML to crawlers. One example is using Headless Chrome and the Puppeteer library, which will convert routes to pages into a hierarchical tree of HTML files. But keep in mind that you’ll need to remove bootstrap code and edit your server configuration file to locate the static HTML intended for search bots.

Progressive enhancement with feature detection

Using the feature detection method is one of Google’s strongest recommendations for SPAs. This technique involves progressively enhancing the experience with different code resources. It works by using a simple HTML page as the foundation that is accessible to both crawlers and users. On top of this page, additional features such as CSS and JS are added and enabled or disabled according to browser support.

To implement feature detection, you’ll need to write separate chunks of code to check whether each of the required feature APIs is supported by the browser. Fortunately, there are special libraries like Modernizr that can help you save time and simplify this process.
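As a rough illustration of what such a check can look like without a library, the sketch below tests for a few browser APIs before relying on them. The function name `detectFeatures` and the choice of features are assumptions made for this example; in the browser you would pass `window` as the environment.

```javascript
// Reports which of a few browser APIs are available in the given environment.
// `env` is expected to be `window` in a browser; any object works for testing.
function detectFeatures(env) {
  return {
    historyApi: Boolean(env.history && typeof env.history.pushState === "function"),
    intersectionObserver: typeof env.IntersectionObserver === "function",
    fetch: typeof env.fetch === "function",
  };
}

// Example of progressive enhancement: only switch on client-side routing
// when the History API is there, otherwise keep plain links and full page loads.
function shouldEnableClientRouting(env) {
  return detectFeatures(env).historyApi;
}
```

The basic HTML page stays usable either way, and the enhanced behavior is layered on top only where the check passes.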

Views as URLs to make them crawlable

When users scroll through an SPA, they pass separate website sections. Technically, an SPA contains only one page (a single index.html file), but visitors feel like they’re browsing multiple pages. When users move through different parts of a single-page application website, the URL changes only in its hash part (for example, http://website.com/#/about, http://website.com/#/contact). The JS file instructs browsers to load certain content based on fragment identifiers (hash changes).

To help search engines perceive different sections of a website as different pages, you need to use distinct URLs with the help of the History API. It is a standardized method in HTML5 for manipulating the browser history. Google Codelabs suggests using this API instead of hash-based routing so that search engines can recognize the different pieces of content as separate pages. The History API allows you to change navigation links and use paths instead of hashes.

Google analyst Martin Splitt gives the same advice: treat views as URLs by using the History API. He also suggests adding link markup with href attributes, and creating unique titles and description tags for each view (with “a bit extra JavaScript”).
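The switch from hashes to paths can be sketched in a few lines of JavaScript. This is a simplified example under stated assumptions: `hashToPath` and `navigate` are hypothetical helper names, and `render` stands for whatever function your application uses to draw a view. Only `history.pushState()` and the `URL` constructor are standard APIs.

```javascript
// Converts a hash-based URL such as http://website.com/#/about into a path (/about).
// URLs that already use paths are returned with their pathname unchanged.
function hashToPath(url) {
  const parsed = new URL(url);
  const fragment = parsed.hash.replace(/^#/, "");
  return fragment.startsWith("/") ? fragment : parsed.pathname;
}

// Updates the address bar with a real path and then renders the matching view.
// `historyApi` defaults to the browser's history object when one exists.
function navigate(path, render, historyApi = globalThis.history) {
  if (historyApi && typeof historyApi.pushState === "function") {
    historyApi.pushState({ path }, "", path);
  }
  render(path);
}
```

In the browser you would typically call `navigate()` from a click handler on regular `<a href="/about">` links and also listen for the `popstate` event so the back button renders the right view.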

Note that this markup advice applies to any link on your website. To make links crawlable by search engines, you should use the <a> tag with an href attribute instead of relying on the onclick action. This is because JavaScript onclick can’t be crawled and is pretty much invisible to Google.

So, the primary rule is to make links crawlable. Make sure your links follow Google’s standards for SPA SEO and that they appear as follows:

<a href="https://yoursite.com">

<a href="https://seranking.com/companies/class/SEO">

Google may try to parse links formatted differently, but there’s no guarantee it will do so or succeed. So avoid links that appear in the following way:

<a routerLink="companies/class">

<span href="https://yoursite.com">

<a onclick="goto('https://yoursite.com')">

You should begin by adding links using the <a> HTML element, which Google understands as a classic link format. Next, make sure that the URL included is a valid and functioning web address, and that it follows the rules of the Uniform Resource Identifier (URI) standard. Otherwise, crawlers won’t be able to properly index and understand the website’s content.
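If you want to audit links programmatically, the rules above can be approximated with a small check. This is a sketch, not Google’s actual parsing logic: `isCrawlableLink` is a hypothetical helper that takes a simplified description of an element (its tag name and href value) and a base URL used to resolve relative links.

```javascript
// Returns true when an element looks like a crawlable link:
// an <a> tag whose href resolves to an http(s) URL.
function isCrawlableLink(link, baseUrl = "https://yoursite.com") {
  if (link.tag !== "a" || typeof link.href !== "string" || link.href === "") {
    return false;
  }
  try {
    const resolved = new URL(link.href, baseUrl);
    return resolved.protocol === "http:" || resolved.protocol === "https:";
  } catch {
    // The href could not be parsed as a URL at all.
    return false;
  }
}
```

With this check, an `<a>` that only has an onclick handler, a `<span>` with an href, and a `javascript:` link are all reported as not crawlable, which matches the examples to avoid listed above.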

Views for error pages

With single-page websites, the server has nothing to do with error handling and will always return the 200 status code, which indicates (incorrectly in this case) that everything is okay. But users may sometimes use the wrong URL to access an SPA, so there should be some way to handle error responses. Google recommends creating separate views for each error code (404, 500, etc.) and tweaking the JS file so that it directs browsers to the respective view.
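A client-side router can pick the error view along these lines. The example is a minimal sketch: the route table, the view functions, and the `resolveView` name are assumptions for illustration, and the `code` field only identifies which view was chosen. It does not change the HTTP status the server sends.

```javascript
// Hypothetical route table: path -> function that returns the HTML for a view.
const views = new Map([
  ["/", () => "<h1>Home</h1>"],
  ["/about", () => "<h1>About</h1>"],
]);

// One dedicated view per error code.
const errorViews = {
  404: () => "<h1>Page not found</h1>",
  500: () => "<h1>Something went wrong</h1>",
};

// Returns the view for a path, falling back to the 404 view for unknown
// paths and to the 500 view when rendering a known view throws.
function resolveView(path, routes = views) {
  const view = routes.get(path);
  if (!view) {
    return { code: 404, html: errorViews[404]() };
  }
  try {
    return { code: 200, html: view() };
  } catch (err) {
    return { code: 500, html: errorViews[500]() };
  }
}
```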

Titles & descriptions for views

Titles and meta descriptions are essential elements for on-page SEO. A well-crafted meta title and description can both improve the website’s visibility in SERPs and increase its click-through rate.

In the case of SPA SEO, managing these meta tags can be challenging because there’s only one HTML file and URL for the entire website. At the same time, duplicate titles and descriptions are among the most frequent SEO issues.

How to fix this issue?

Focus on views, which are the HTML fragments in SPAs that users perceive as screens or ‘pages’. It’s crucial to create unique views for each section of your single-page website. It’s also important to dynamically update titles and descriptions to reflect the content being displayed within each view.

To set or change the meta description and <title> element in an SPA, developers can use JavaScript.
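Here is one way this could look. The sketch assumes a hypothetical per-view metadata table (`viewMeta`) and helper names (`metaFor`, `applyMeta`), and the titles and descriptions are placeholders. The DOM calls it relies on (`document.title`, `querySelector`, `createElement`, `setAttribute`) are standard.

```javascript
// Hypothetical metadata for each view; the texts are placeholders.
const viewMeta = new Map([
  ["/", { title: "Home | Your Site", description: "Welcome to Your Site." }],
  ["/about", { title: "About | Your Site", description: "Learn who we are." }],
]);
const fallbackMeta = { title: "Your Site", description: "Your Site." };

// Looks up the metadata for a view, with a generic fallback.
function metaFor(path) {
  return viewMeta.get(path) || fallbackMeta;
}

// Writes the title and meta description for the given view into the document.
// Creates the description tag when the page does not have one yet.
function applyMeta(path, doc = globalThis.document) {
  const meta = metaFor(path);
  if (!doc) return meta; // no DOM available (for example, outside the browser)
  doc.title = meta.title;
  let tag = doc.querySelector('meta[name="description"]');
  if (!tag) {
    tag = doc.createElement("meta");
    tag.setAttribute("name", "description");
    doc.head.appendChild(tag);
  }
  tag.setAttribute("content", meta.description);
  return meta;
}
```

Calling `applyMeta(path)` every time the router switches views keeps each view’s title and description unique.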

Using robots meta tags

Robots meta tags provide instructions to search engines on how to crawl and index a website’s pages. When implemented correctly, they can ensure that search engines crawl and index the most important parts of the website, while avoiding duplicate content or incorrect page indexing.

For example, using a “nofollow” directive can prevent search engines from following links within a certain view, while a “noindex” directive in the robots meta tag can exclude certain views or sections of the SPA from being indexed.

<meta name="robots" content="noindex, nofollow">

You can also use JavaScript to add a robots meta tag, but if a page has a noindex directive in its robots meta tag, Google won’t render or execute JavaScript on that page. In this case, your attempts to change or remove the noindex tag using JavaScript won’t be effective because Google will never even see that code.

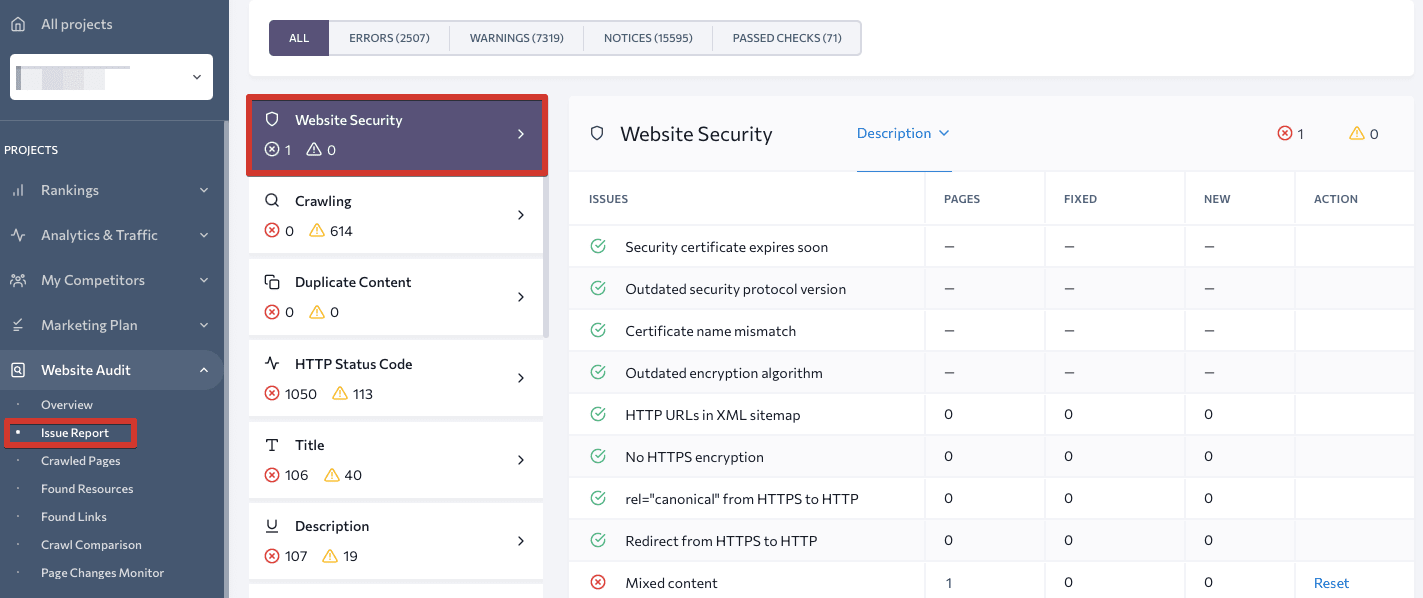

To check for robots meta tag issues on your SPA, run a single-page app audit with SE Ranking. The audit tool will analyze your website and detect any technical issues that are blocking your website from reaching the top of the SERP.

Avoid soft 404 errors

A soft 404 error occurs when a website returns a status code of 200 (OK) instead of the appropriate 404 (Not Found) for a page that doesn’t exist. The server is incorrectly telling search engines that the page exists.

Soft 404 errors can be particularly problematic for SPA websites because of the way they’re built and the technology they use. Since SPAs rely heavily on JavaScript to dynamically load content, the server may not always be able to accurately identify whether a requested page exists or not. Because of client-side routing, which is typically used in client-side rendered SPAs, it’s often impossible to use meaningful HTTP status codes.

You can avoid soft 404 errors by applying one of the following strategies:

- Use a JavaScript redirect to a URL that triggers a 404 HTTP status code from the server.

- Add a noindex tag to error pages using JavaScript.
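Both strategies can be sketched in one small helper. The function name `handleMissingContent`, the `strategy` argument, and the `/not-found` URL are assumptions made for this example; the approach only works if your server really answers that URL with a 404 status code. In the browser, you would pass `window` as `env`.

```javascript
// Handles a view whose content does not exist, using one of two strategies:
// "redirect" sends the browser to a URL that the server answers with a 404,
// anything else adds <meta name="robots" content="noindex"> to the page.
function handleMissingContent(strategy, env, notFoundUrl = "/not-found") {
  if (strategy === "redirect") {
    env.location.href = notFoundUrl; // assumed to return HTTP 404 from the server
    return "redirected";
  }
  const tag = env.document.createElement("meta");
  tag.name = "robots";
  tag.content = "noindex";
  env.document.head.appendChild(tag);
  return "noindexed";
}
```

You would call this from the code path that discovers the missing content, for example when an API request for the view’s data comes back empty.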

Lazily loaded content

Lazy loading refers to the practice of loading content, such as images or videos, only when it is needed, typically as a user scrolls down the page. This technique can improve page speed and user experience, especially for SPAs where large amounts of content can be loaded at once. But if applied incorrectly, it can unintentionally hide content from Google.

To ensure that Google sees and indexes all the content on your page, it’s essential to take precautions. Make sure that all relevant content is loaded whenever it’s displayed in the viewport. You can do this by:

- Applying native lazy-loading for images and iframes, implemented using the “loading” attribute.

- Using the IntersectionObserver API, which lets developers detect when an element enters or exits the viewport, along with a polyfill to ensure browser compatibility.

- Resorting to a JavaScript library that provides a set of tools and functions that make it easy to load content only when it enters the viewport.

Whichever approach you choose, make sure it works correctly. Use a Puppeteer script to run local tests, and use the URL inspection tool in Google Search Console to see if all images were loaded.
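For the IntersectionObserver option, a minimal sketch might look like the following. It assumes images are marked up with a `data-src` attribute that holds the real URL, and `lazyLoadImages` is a hypothetical helper name. When the API is not available, the sketch simply loads everything right away so that no content stays hidden.

```javascript
// Loads each image's real source (taken from data-src) once it enters the viewport.
// `ObserverClass` defaults to the browser's IntersectionObserver when it exists.
function lazyLoadImages(images, ObserverClass = globalThis.IntersectionObserver) {
  const load = (img) => {
    if (img.dataset.src) {
      img.src = img.dataset.src;
      delete img.dataset.src;
    }
  };

  // Fallback: without IntersectionObserver, load every image immediately.
  if (typeof ObserverClass !== "function") {
    images.forEach(load);
    return null;
  }

  const observer = new ObserverClass((entries) => {
    for (const entry of entries) {
      if (entry.isIntersecting) {
        load(entry.target);
        observer.unobserve(entry.target); // each image only needs loading once
      }
    }
  });
  images.forEach((img) => observer.observe(img));
  return observer;
}
```

In the browser, you could call it as `lazyLoadImages(Array.from(document.querySelectorAll("img[data-src]")))`.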

Social shares and structured data

Social sharing optimization is often overlooked by websites. No matter how insignificant it may seem, implementing Twitter Cards and Facebook’s Open Graph will allow for rich sharing across popular social media channels, which is good for your website’s search visibility. If you don’t use these protocols, sharing your link will trigger the preview display of a random, and sometimes irrelevant, visual object.

Using structured data is also extremely useful for making different types of website content readable to crawlers. Schema.org provides options for labeling data types like videos, recipes, products, and so on.

You can also use JavaScript to generate the required structured data for your SPA in the form of JSON-LD and inject it into the page. JSON-LD is a lightweight data format that’s easy to generate and parse.
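As a rough example, the sketch below builds a schema.org Article object and injects it as a JSON-LD script tag. The helper names (`buildJsonLd`, `injectJsonLd`) and the fields of the `article` argument are assumptions for illustration; pick the schema.org type and properties that match your own content.

```javascript
// Builds a JSON-LD string describing an article with schema.org vocabulary.
function buildJsonLd(article) {
  return JSON.stringify({
    "@context": "https://schema.org",
    "@type": "Article",
    headline: article.headline,
    datePublished: article.datePublished,
    author: { "@type": "Person", name: article.authorName },
  });
}

// Adds the JSON-LD to the page inside <script type="application/ld+json">.
// Returns false when no document is available (for example, outside the browser).
function injectJsonLd(json, doc = globalThis.document) {
  if (!doc) return false;
  const script = doc.createElement("script");
  script.type = "application/ld+json";
  script.textContent = json;
  doc.head.appendChild(script);
  return true;
}
```

After injecting the markup, you can confirm that it is picked up by running the rendered page through a structured data testing tool.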

You can run Google’s Rich Results Test to discover any currently assigned data types and to enable rich search results for your web pages.

Testing an SPA for SEO

There are several ways to test your SPA website’s SEO. You can use tools like Google Search Console or the Mobile-Friendly Test. You can also check your Google cache or inspect your content in search results. We’ve outlined how to use each of them below.

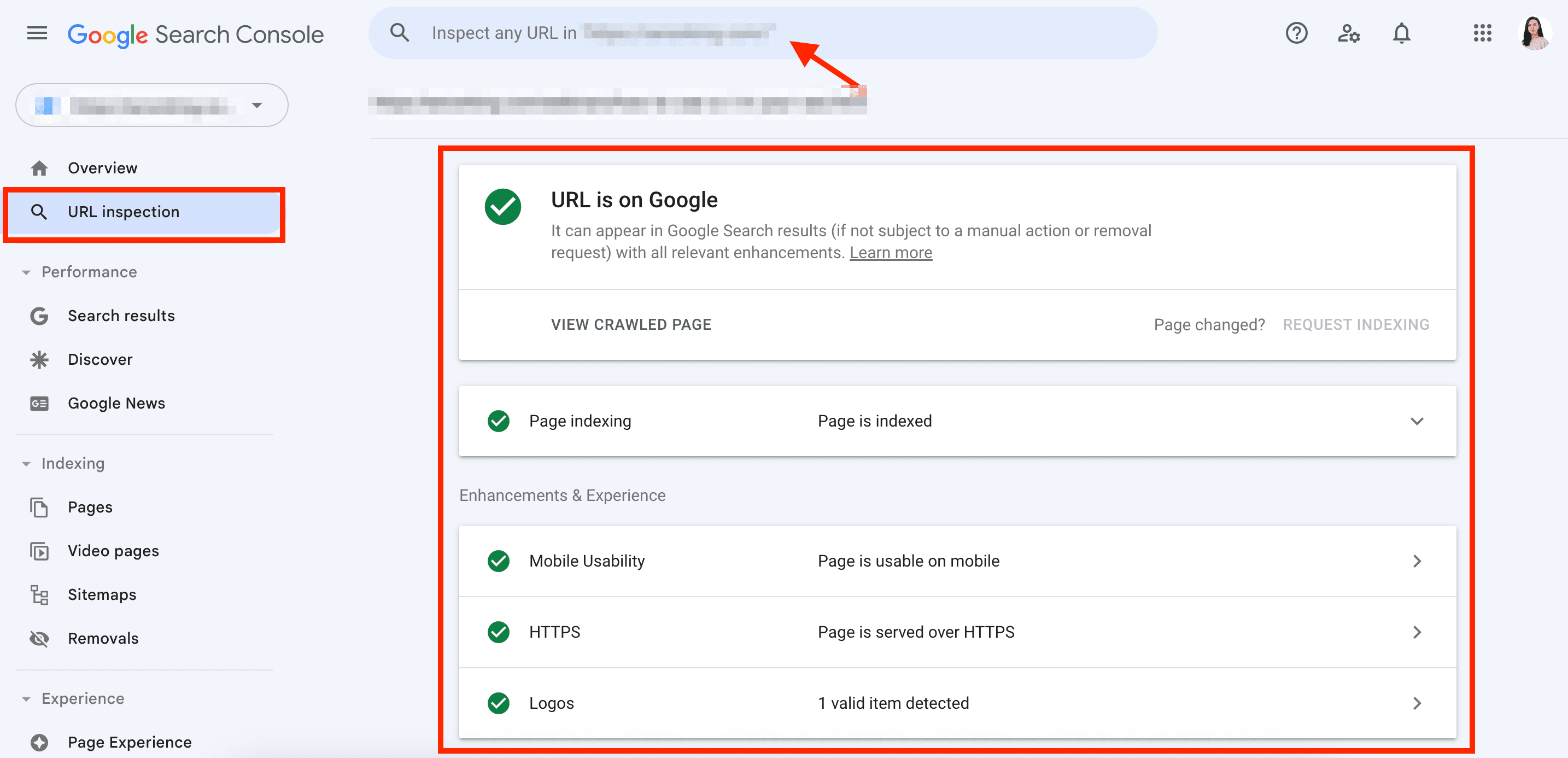

URL inspection in Google Search Console

You can access the essential crawling and indexing information in the URL Inspection section of Google Search Console. It doesn’t give a full preview of how Google sees your page, but it does provide you with basic information, including:

- Whether the search engine can crawl and index your website

- The rendered HTML

- Page resources that can’t be loaded and processed by search engines

You can find details related to page indexing, mobile usability, HTTPS, and logos by opening the reports.

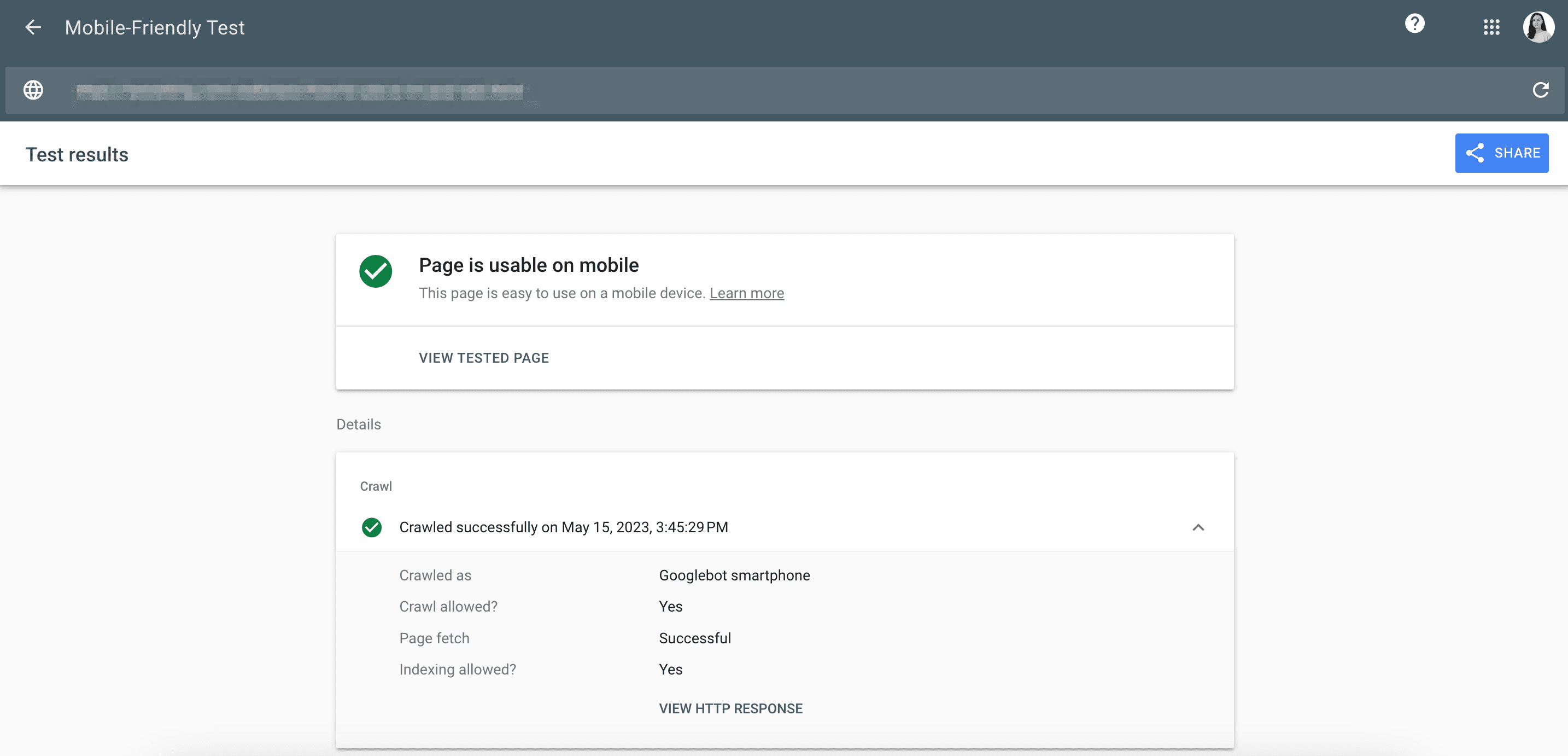

Google’s Mobile-Friendly Test

Google’s Mobile-Friendly Test shows almost the same information as GSC. It also helps to check whether the rendered page is readable on mobile screens.

Read our guide on mobile SEO to get expert tips on how to make your website mobile-friendly.

Plus, Headless Chrome is a great tool for testing your SPA and observing how JS will be executed. Unlike traditional browsers, a headless browser doesn’t have a full UI but provides the same environment that real users would experience.

Finally, use tools like BrowserStack to test your SPA on different browsers.

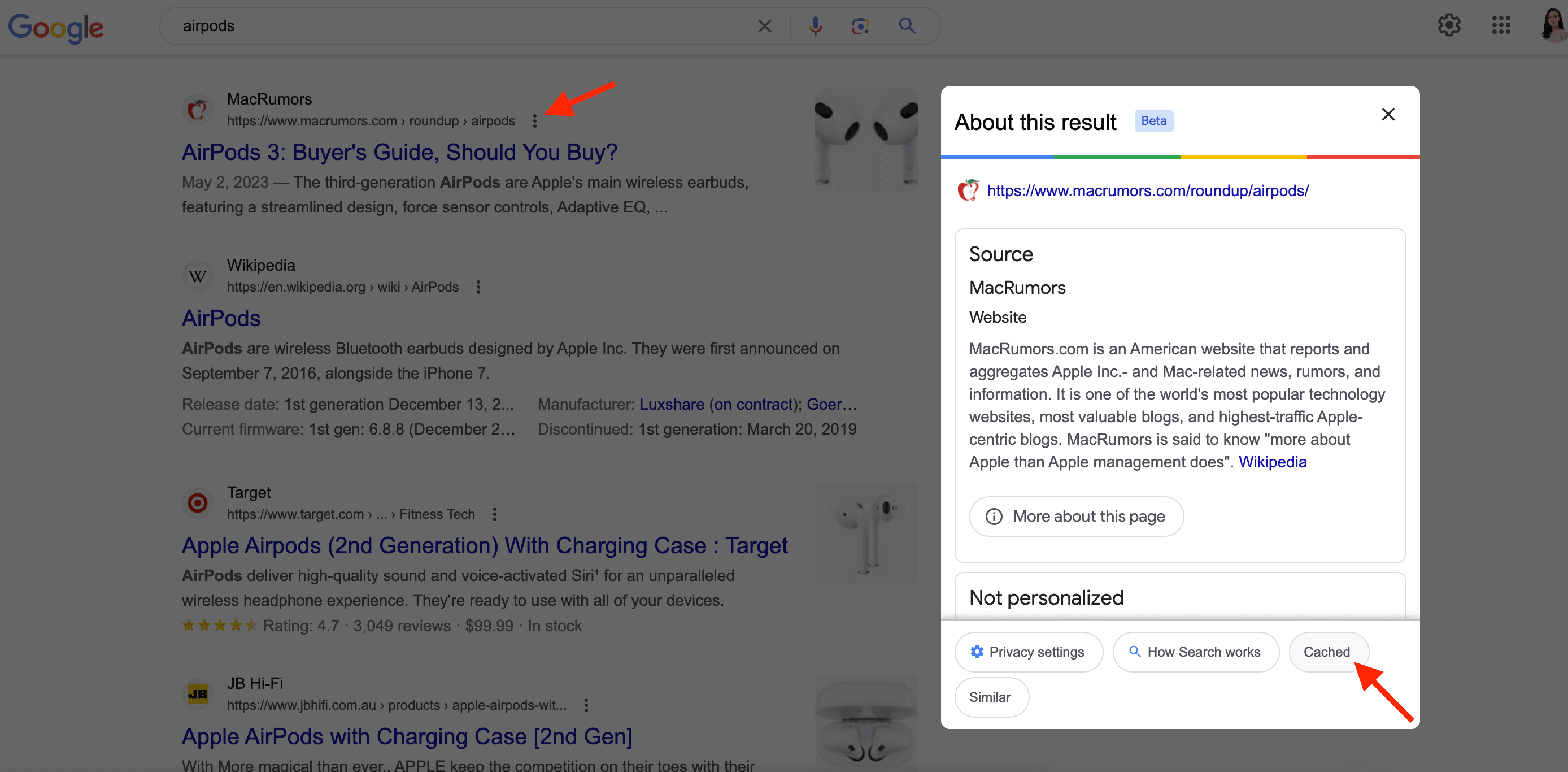

Check your Google cache

Another basic technique for testing your SPA website for SEO is to check the Google cache of your website’s pages. Google cache is a snapshot of a web page taken by Google’s crawler at a specific point in time.

To open the cache:

- Search for the page on Google.

- Click on the icon with three dots next to the search result.

- Choose the Cached option.

Alternatively, you can use the search operator “cache:” and paste your URL after the colon (without a space), or you can use Google Cache Checker, which is our free and simple tool for checking your cached version.

Note! The cached version of your page isn’t always the most up-to-date. Google updates its cache regularly, but there can be a delay between when a page is updated and when the new version appears in the cache. If Google’s cached version of a page is outdated, it may not accurately reflect the page’s current content and structure. That’s why it’s not a good idea to rely solely on Google cache for single-page application SEO testing or even for debugging purposes.

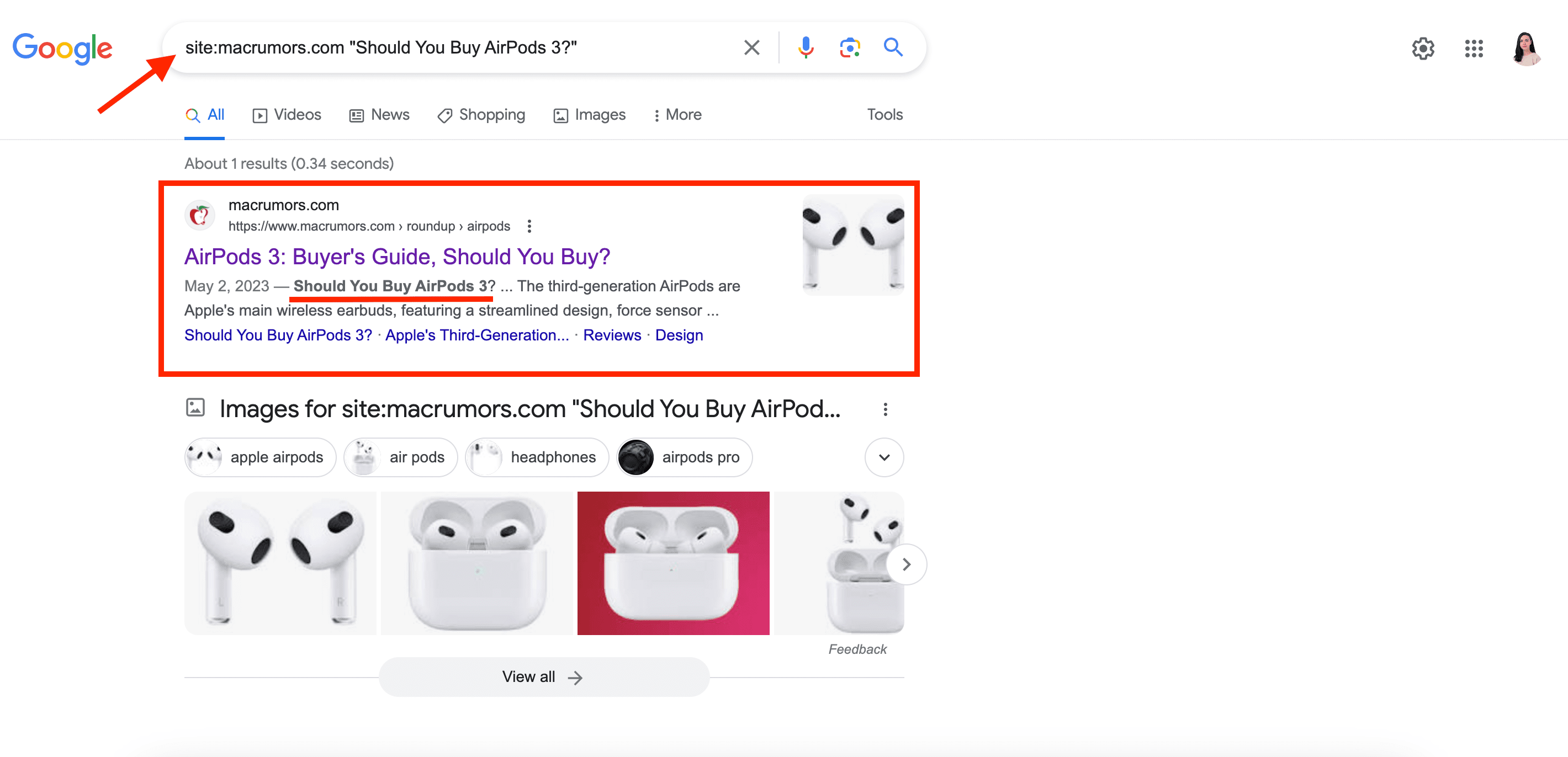

Check the content in the SERP

There are several ways to check how your SPA appears in SERPs:

- You can check direct quotes of your content in the SERP to see whether the page containing that text is indexed.

- You can use the site: command to check your URL in the SERP.

Finally, you can combine both. Enter site:domain name “content quote”, like in the screenshot below, and if the content is crawled and indexed, you’ll see it in the search results.

There’s no method round fundamental Search engine optimisation

Apart from the particular nature of a single web page utility, most basic optimization recommendation applies to the sort of web site. Some fundamental Search engine optimisation methods to incorporate contain optimizing for:

- Safety. In the event you haven’t already, defend your web site with HTTPS. In any other case, search engines like google may solid apart your website and compromise its person knowledge if it’s utilizing any. By no means cross Web site safety off your to-do record, because it requires common monitoring. Test your SSL/TLS certificates for important errors frequently to ensure your web site could be safely accessed:

- Content material optimization. We’ve talked about particular measures for optimizing content material in SPA, similar to writing distinctive title tags and outline meta tags for every view, just like how you’ll for every web page on a multi-page web site. However it is advisable to have optimized content material earlier than taking the above measures. Your content material needs to be tailor-made to the best person intents, well-organized, visually interesting, and wealthy in useful data. In the event you haven’t collected a key phrase record for the location, will probably be difficult to ship the content material your guests want. Check out our information on key phrase analysis for brand new insights.

- Hyperlink constructing. Backlinks play a significant function in signaling Google concerning the degree of belief different assets have in your web site. Due to this, constructing a backlink profile is an important a part of your website’s Search engine optimisation. No two backlinks are alike, and every hyperlink pointing to your web site holds a distinct worth. Whereas some backlinks can considerably increase your rankings, spammy ones can harm your search presence. Take into account studying extra about backlink high quality and following greatest practices to strengthen your hyperlink profile.

- Competitor monitoring. You’ve most likely already researched your competitors during the early stages of your website’s development. However, as with any SEO and marketing task, it is important to monitor your niche regularly. Thanks to data-rich tools, you can easily track rivals’ strategies in organic and paid search. This lets you evaluate the market landscape, spot fluctuations among major competitors, and draw inspiration from successful keywords or campaigns that already work for similar sites.
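For the security item in the list above, regular certificate monitoring can be partly automated. The following is a minimal sketch, not a complete check: the function names, the status labels, and the 30-day warning threshold are all assumptions made for illustration. It only classifies a certificate by its expiry date; in Node.js that date could be read from the `valid_to` field of the object returned by `tls.TLSSocket.getPeerCertificate()`.

```typescript
// Hypothetical helper: names, labels, and the default threshold are illustrative assumptions.
type CertStatus = "expired" | "expiring-soon" | "ok";

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Whole days left before the certificate's expiry date (negative once it has passed).
function daysUntilExpiry(validTo: Date, now: Date): number {
  return Math.floor((validTo.getTime() - now.getTime()) / MS_PER_DAY);
}

// Classify the certificate so a scheduled job can alert before visitors see a browser warning.
function certStatus(validTo: Date, now: Date, warnDays = 30): CertStatus {
  const days = daysUntilExpiry(validTo, now);
  if (days < 0) return "expired";
  if (days <= warnDays) return "expiring-soon";
  return "ok";
}
```

Expiry is only one kind of certificate error; hostname mismatches and broken certificate chains would need separate checks.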

Tracking single-page applications

Track SPA with GA4

Tracking user behavior on SPA websites can be challenging, but GA4 has the tools to handle it. By using GA4 for SEO, you’ll be able to better understand how users engage with your website, identify areas for improvement, and make data-driven decisions to improve user experience and ultimately drive business success.

If you still haven’t installed Google Analytics, read the guide on GA4 setup to learn how to do it quickly and correctly.

Once you’re ready to proceed, follow these steps:

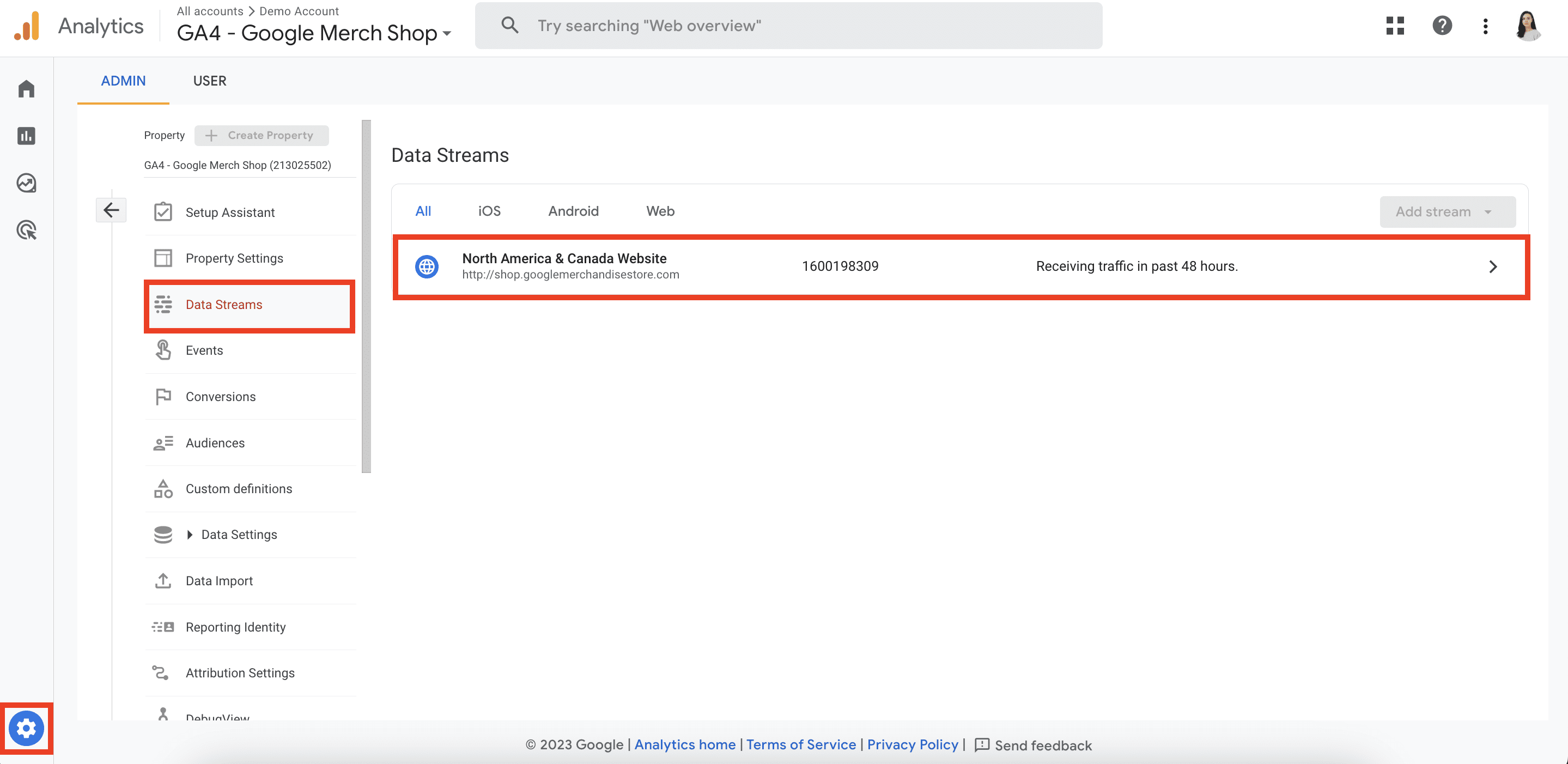

- Go to your GA4 account and open Data Streams in the Admin section. Click on your web data stream.

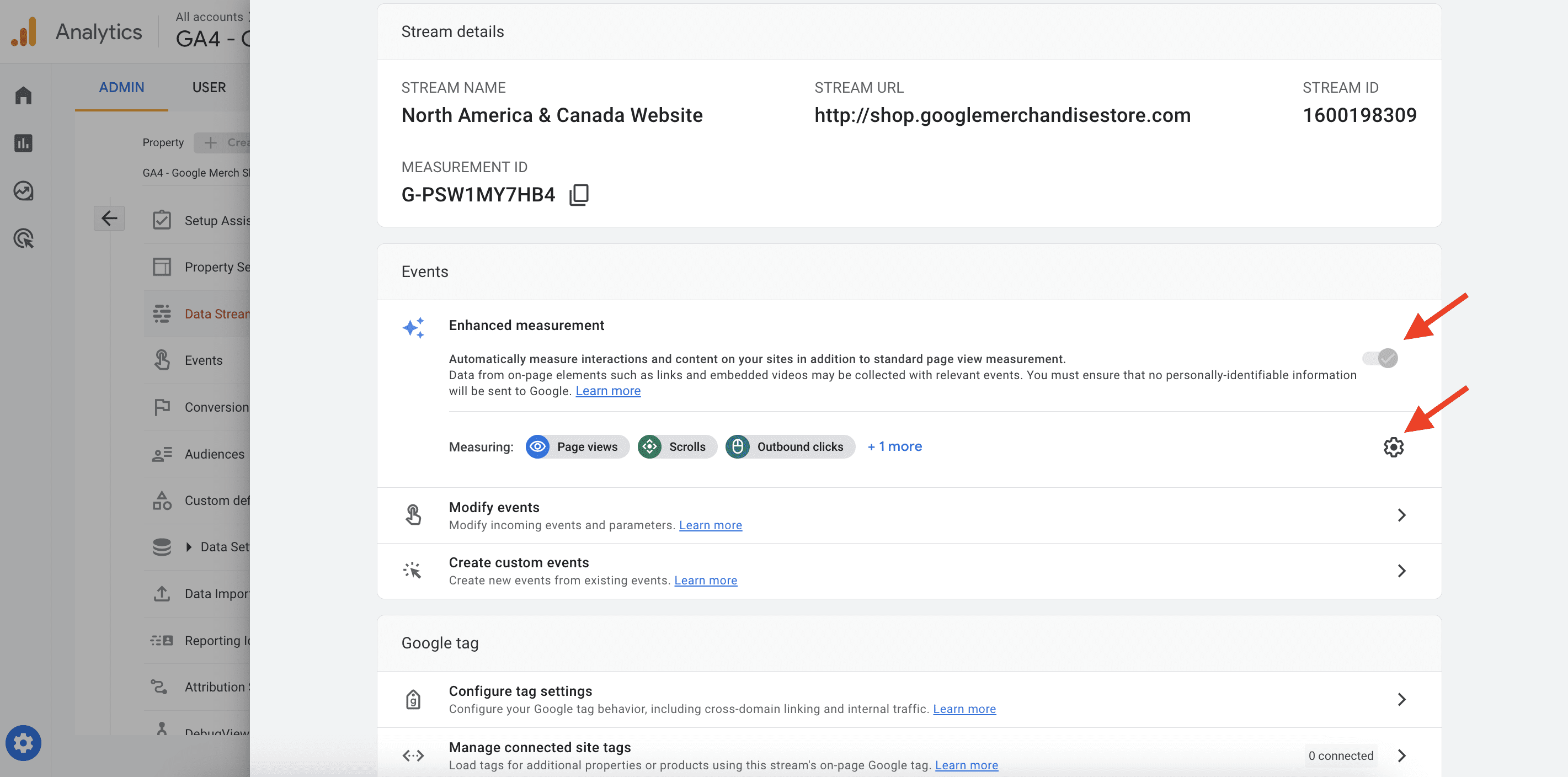

- Make sure that the Enhanced Measurement toggle is enabled. Click the gear icon.

- Open the advanced settings within the Page views section and enable the Page changes based on browser history events setting. Remember to save the changes. It’s also recommended to disable all default tracking that is unrelated to pageviews, as it may affect accuracy.

- Open Google Tag Manager and enable the Preview and Debug mode.

- Navigate through different pages on your SPA website.

- In Preview mode, the GTM container will start showing you History Change events.

- If you click on your GA4 measurement ID next to the GTM container in Preview mode, you should see several Page View events being sent to GA4.
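The history-based setting in the steps above only helps if the SPA actually changes the URL through the browser History API when the view changes. Most routers do this already; the sketch below shows the idea in isolation. The `HistoryLike` interface and the `navigateTo` helper are hypothetical names introduced here so the example can run outside a browser.

```typescript
// Minimal slice of the browser History API, so the sketch type-checks outside a browser too.
interface HistoryLike {
  pushState(data: unknown, unused: string, url: string): void;
}

// Hypothetical client-side navigation helper (illustrative, not part of any framework).
function navigateTo(
  history: HistoryLike,
  path: string,
  render: (path: string) => void
): void {
  // Change the URL without a full page load; this is the kind of
  // history change that analytics tooling can listen for.
  history.pushState({ path }, "", path);
  // Swap the visible view to match the new URL.
  render(path);
}
```

In a browser, this would be called as `navigateTo(window.history, "/pricing", renderView)`, where `renderView` stands for whatever function your app uses to draw a view.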

If these steps work, GA4 will be able to track your SPA website. If they don’t, you may need to take the following additional steps:

- Implementing the History Change trigger in GTM.

- Asking developers to implement a dataLayer.push call.

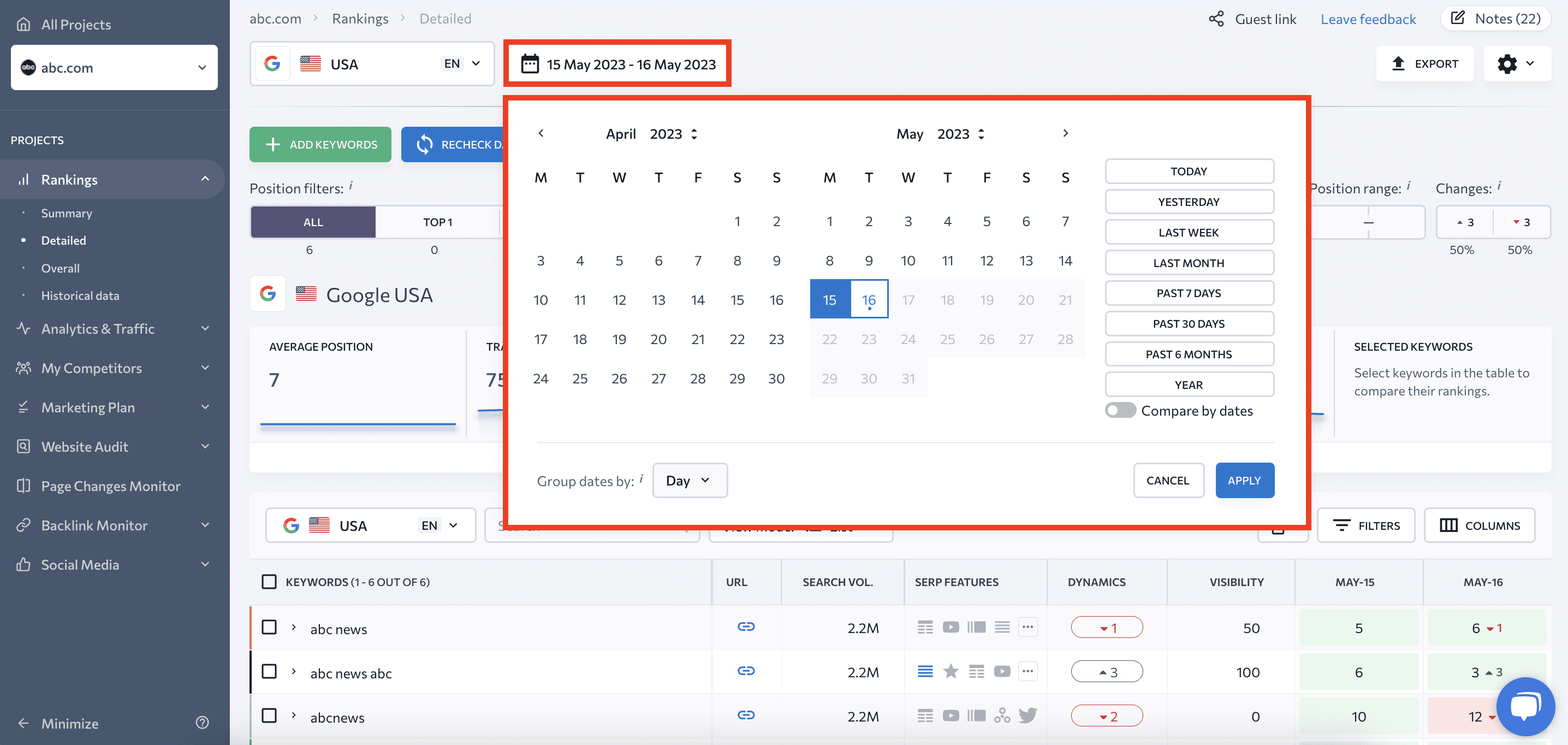
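A dataLayer.push call for a view change could look roughly like the sketch below. The event name `virtual_pageview` and the `page_path` and `page_title` keys are assumptions chosen for illustration; they need to match whatever Custom Event trigger and variables are configured in your GTM container. The data layer is passed in as a parameter so the function can be tried outside a browser.

```typescript
type DataLayerEvent = Record<string, unknown>;

// Hypothetical helper: the event and field names are illustrative and must
// match the trigger and variables set up in your own GTM container.
function pushVirtualPageview(
  dataLayer: DataLayerEvent[],
  path: string,
  title: string
): void {
  dataLayer.push({
    event: "virtual_pageview",
    page_path: path,
    page_title: title,
  });
}
```

On a live site, developers would typically call this from the router’s navigation hook, passing the page’s global `dataLayer` array, the current `location.pathname`, and `document.title`.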

Track SPA with SE Ranking’s Rank Tracker

Another comprehensive tracking tool is SE Ranking’s Rank Tracker. It lets you check single-page applications for the keywords you want them to rank for, across multiple geographical locations, devices, and languages. The tool supports tracking on popular search engines such as Google, Google Mobile, Yahoo!, and Bing.

To start tracking, you need to create a project for your website on the SE Ranking platform, add keywords, choose search engines, and specify competitors.

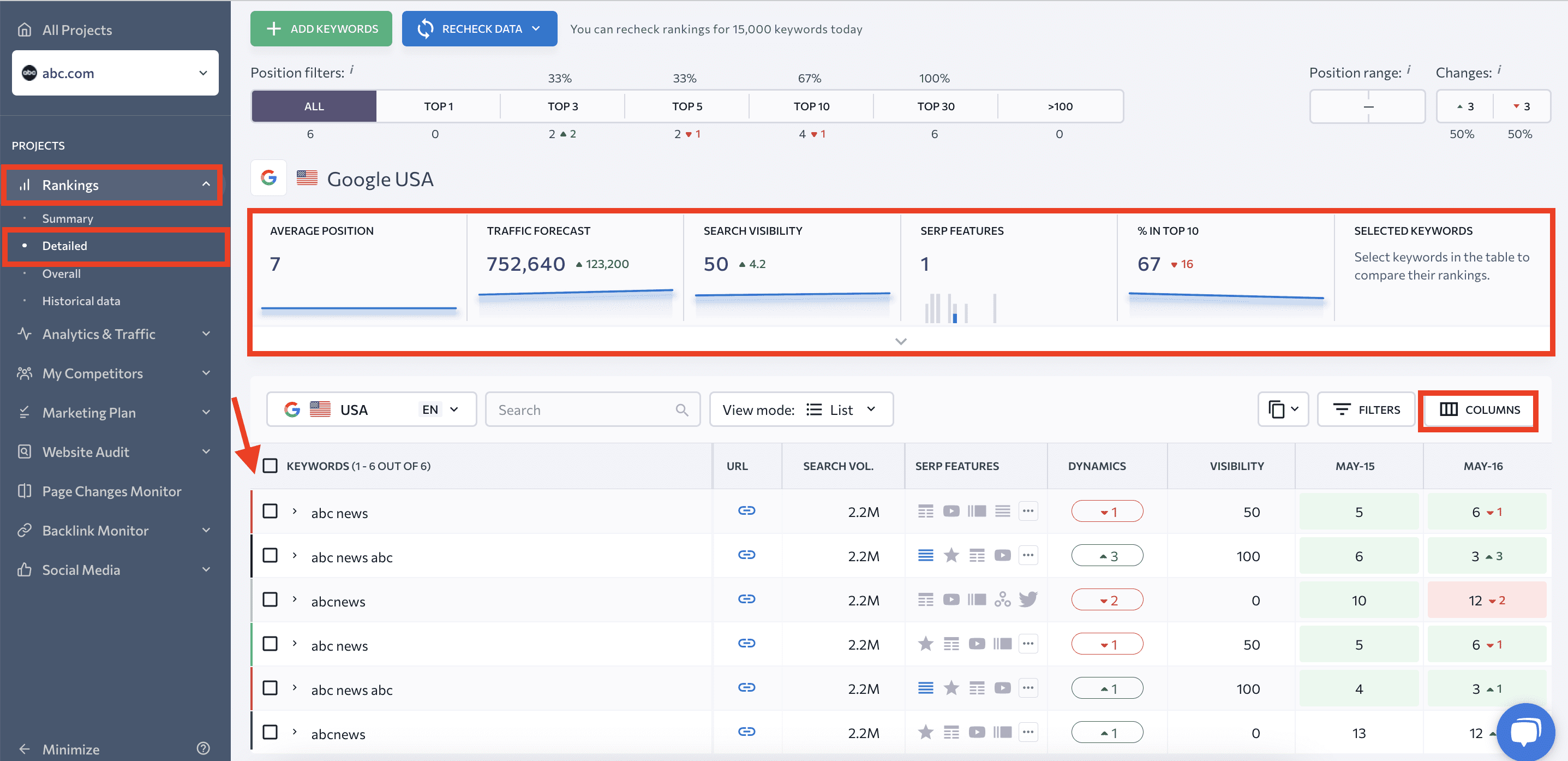

Once your project is set up, go to the Rankings tab, which consists of several reports:

- Summary

- Detailed

- Overall

- Historical Data

We’ll focus on the default Detailed tab. It will likely be the first report you see after adding your project. At the top of this section, you’ll find your SPA’s:

- Average position

- Traffic forecast

- Search visibility

- SERP features

- % in top 10

- Keyword list

The keyword table displayed below these graphs provides information on each keyword that your website ranks for. It includes details such as the target URL, search volume, SERP features, ranking dynamics, and so on. You can customize the table with additional parameters available in the Columns section.

The tool lets you filter your keywords based on your preferred parameters. You can also set target URLs and tags, see ranking data for different dates, and even compare results.

Keyword Rank Tracker provides you with two more reports:

- Your website ranking data: This covers all search engines you have added to the project, shown in a single tab labeled “Overall”.

- Historical data: This covers the changes in your website rankings since the baseline date. Navigate to the Historical Data tab to find this information.

For more information on how to monitor website positions, check out our guide on rank tracking in different search engines.

Single-page application websites done right

Now that you know all the ins and outs of SEO for SPA websites, the next step is to put theory into action. Make your content easily accessible to crawlers and watch as your website shines in the eyes of search engines. While providing visitors with dynamic content loading, blazing speed, and seamless navigation, it’s also important to remember to present a static version to search engines. You’ll also want to make sure that you have a correct sitemap, use distinct URLs instead of fragment identifiers, and label different content types with structured data.

The rise of single-page experiences powered by JavaScript caters to the demands of modern users who crave immediate interaction with web content. To maintain the UX-centered benefits of SPAs while achieving high rankings in search, developers are switching to what Airbnb’s engineer Spike Brehm calls “the hard way”: skillfully balancing the client and server aspects of web development.
